There’s a debate that goes round and round about a process commonly known as responsible disclosure: notifying the owner of a system that their security sucks and giving them the opportunity to fix it, rather than telling the great unwashed masses and letting them have at a vulnerable system. The theory goes that responsible disclosure is the ethical thing to do, whilst airing website security dirty laundry publicly makes you an irresponsible cowboy, or something to that effect.

But of course these things are not black and white. On a daily basis I’ll see tweets about how a website is storing credentials in plain text: “Hey [insert name here], what’s the go with emailing my password, aren’t you protecting it?”. Is this “responsible”? I think it’s fair to say it’s not irresponsible; I mean, the risk is obvious, right?

How about security risks such as an XSS flaw, which might be a little more grey but is still frequently shared in public? It’s not exactly going to land you the mother lode of sensitive data in a couple of clicks, but it could be used as part of a more sophisticated attack. Or move right on to the other end of the scale, where serious flaws lead to serious breaches. It might be default credentials on an admin system or publicly accessible backups; the point is that it’s in another realm of risk and impact altogether.

Anyway, the reason for this post is that a number of recent events have given me pause to consider what I regard as responsible disclosure. These events include incidents where I’ve ticked every responsibility box in the book and incidents where I’ve been accused of being, well, a cowboy. I wanted to capture – with as much cohesion as possible – what I consider to be responsible disclosure because, sure enough, I’ll be called on this again in the future and I want a clear point of view that simply won’t fit into 140 characters.

I’d like to start with two disclosure stories that took very different tacks with different outcomes for both the sites and me personally. Here’s what happened:

Disclosure story 1: Westfield

Two years ago I wrote about Find my car, find your car, find everybody’s car; the Westfield’s iPhone app privacy smorgasbord. In short, the Westfield shopping centre here in Sydney down at Bondi had an app to help you find your car, but it readily disclosed the license plate of every vehicle in the centre’s 2,550 car spaces via an improperly protected JSON feed. Oops.

This went straight up onto my blog and subsequently straight into the national media. I posted it in the morning and just a few hours later the system was offline. Shortly after that I had a call from its (rather sheepish) developers in the US. By mid-afternoon the General Manager had commented on my blog post and later made contact personally. He called me that night and we had a very constructive discussion; there wasn’t any anger or malice, it was entirely “Holy shit, this isn’t cool, how do we fix it”, which IMHO was entirely the correct response as it led to the system getting fixed very quickly.

tl;dr: the risk was removed almost instantly, the developers were open and receptive, the management was appreciative and hopefully developers everywhere got to learn a thing or two about securing their APIs. Perhaps most importantly though, at-risk customers were spared as quickly as possible from the potential ramifications of disclosing their personal movements.

Disclosure story 2: Black and Decker

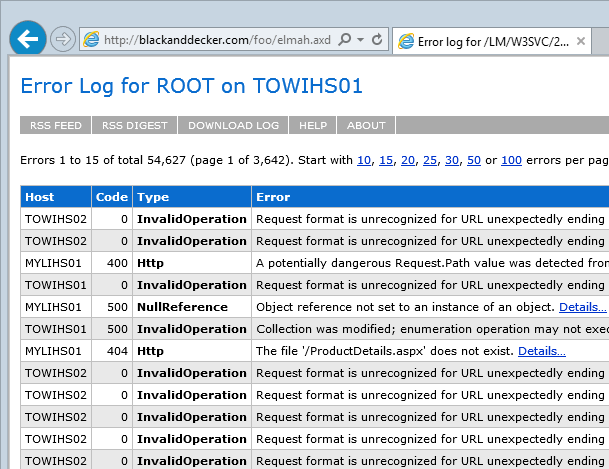

This is very timely as it all took place last week. As I wrote about just yesterday in Security is hard, insecurity is easy – demonstrating a simple misconfiguration risk, Black and Decker had exposed ELMAH logs. Their unfortunate architecture decision to store credentials in cookies (you heard me) meant these were recorded in the logs, which numbered more than 50,000 entries. So here we have a veritable treasure trove of usernames, passwords, IP addresses, request headers, internal code and other titbits – clearly something to be disclosed privately, and given the significance, they’d obviously take it seriously and respond promptly. Or so I thought.

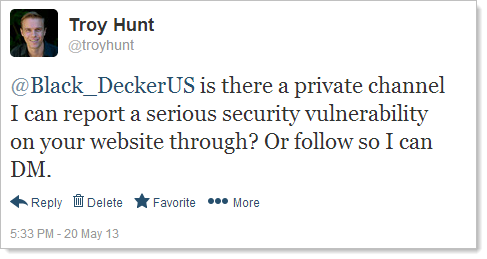
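To see why cookie-based credentials end up in an error log at all, consider a deliberately simplified Python sketch. Error loggers like ELMAH record request details such as cookies alongside each error so developers can reproduce the problem; the `Request` class and `log_error` function below are hypothetical stand-ins for that behaviour, not ELMAH’s actual API.

```python
from dataclasses import dataclass, field


@dataclass
class Request:
    """Hypothetical, minimal stand-in for an incoming HTTP request."""
    path: str
    cookies: dict = field(default_factory=dict)


def log_error(request: Request, exc: Exception) -> dict:
    """Record an error the way a typical error logger does: with the
    request context attached, cookies included (hypothetical helper)."""
    return {
        "path": request.path,
        "error": repr(exc),
        "cookies": dict(request.cookies),  # copied verbatim into the log entry
    }


# A site that keeps credentials in a cookie, the design choice criticised above.
req = Request("/products", cookies={"username": "alice", "password": "hunter2"})
entry = log_error(req, ValueError("boom"))
print(entry["cookies"]["password"])  # prints "hunter2": the password now lives in the log
```

Nothing in the logger is malicious; it faithfully captures what the request carried, so any error at all puts the credentials on record, and an exposed log page then puts them on public display.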

After recording the video for the aforementioned post on Monday afternoon last week (these are all Sydney times), I quickly reached out to Black and Decker on Twitter:

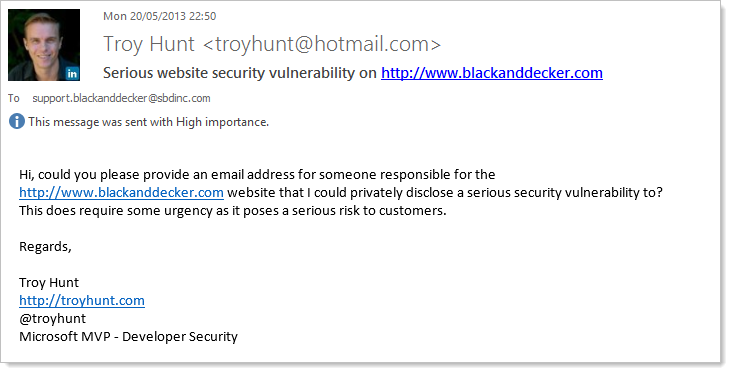

I didn’t hear anything back over the next few hours, but given their Twitter account apparently doesn’t come online until 8am in Maryland (22:00 for me), that’s not surprising. Nearly an hour after they should have come online there was still no contact, so I sent an email after finding a support address on the website:

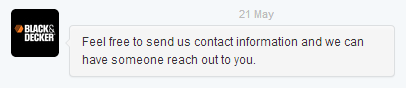

The next morning I get up and there’s a DM waiting for me that had come in at 04:12:

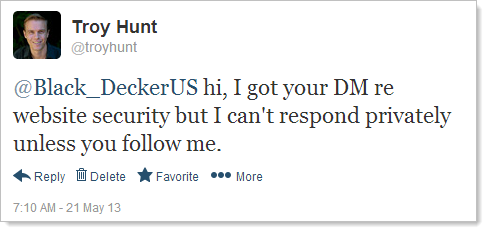

Great! So I write up a response about checking their ELMAH and… I can’t send it because they’re not following me. Great, back to public Twitter then:

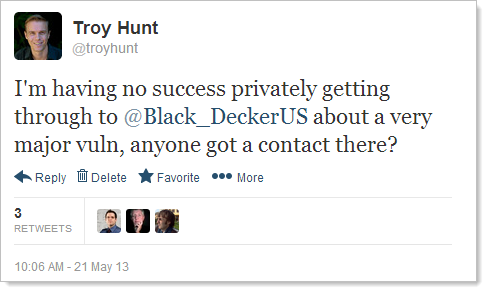

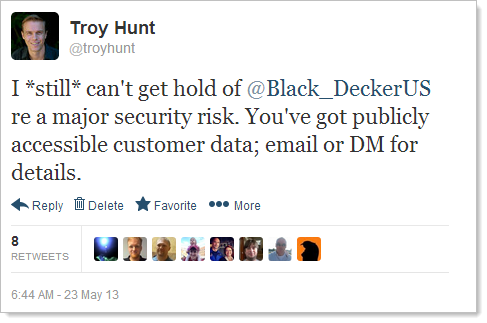

No response a few hours later so it’s back to the masses to try and get some help:

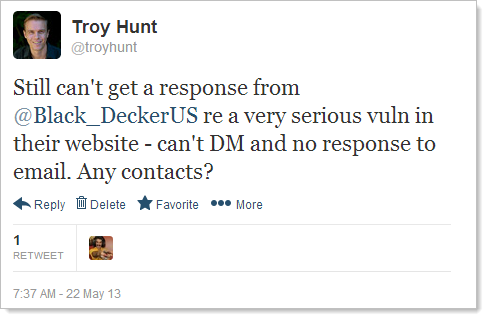

Night comes and goes, still no response so I try again the next day:

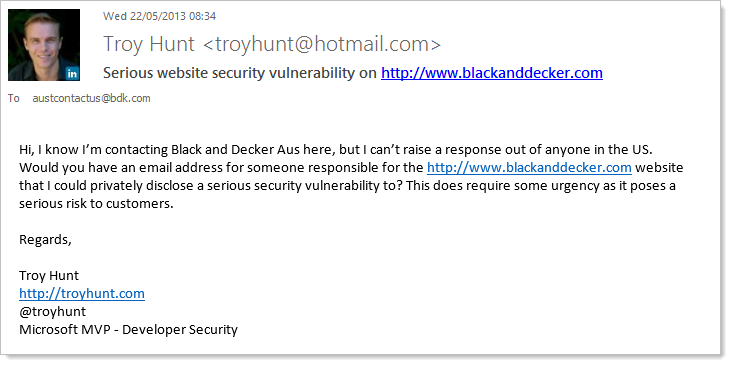

It’s now 38 hours since I first tried making contact through two different channels; they know there’s “a serious security vulnerability” as they’ve DM’d me (without a follow-back so I can respond privately) and I still can’t get through. Time to go local and see if someone down here can help:

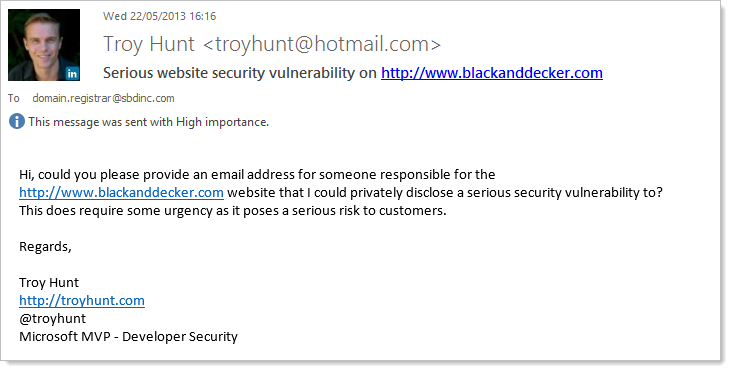

The day passes and nobody down here responds, then a friendly Twitter follower suggests contacting the admin address on the DNS record, so it’s back to email again:

Still nothing comes through overnight, so let’s give Twitter another go and put a bit more urgency into it:

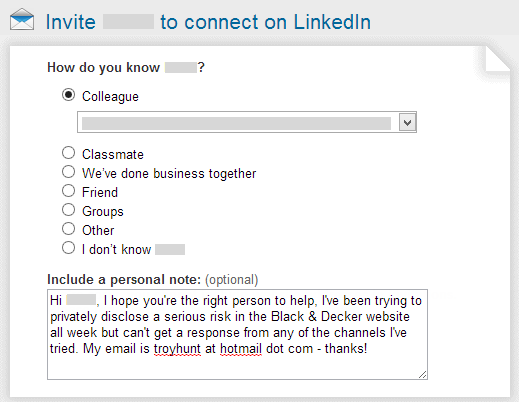

Another friendly follower suggests using LinkedIn to find someone, so I jump over there and manage to find an “Information Technology and Risk Management Executive” who actually shares a previous employer and several contacts with me. This looks like a winner:

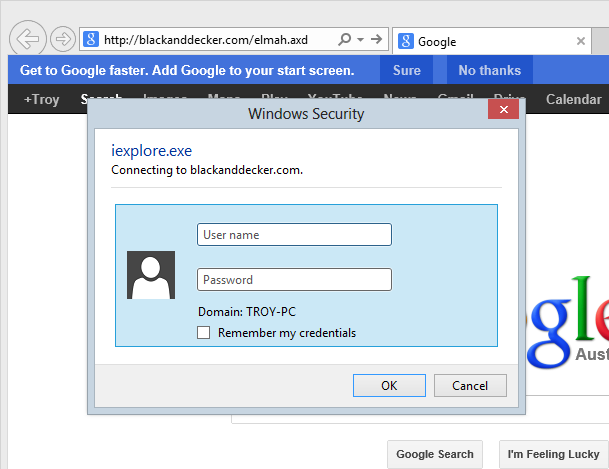

This is now the 9th time I’ve tried to get in touch with Black and Decker. Finally someone from Microsoft responds via Twitter and advises they have a relationship with Black and Decker. I privately email their @microsoft.com address on the evening of the 23rd (now the 10th contact) including the ELMAH Securing Error Log Pages link and they promptly pass it on. Come the morning of the 24th, here’s what happens:

Success! Looks like I’ve finally gotten through! Except, well, I hadn’t really:

This is a little issue that came up in my original post on session hijacking with Google and ELMAH, and it happens because the handler is registered globally yet explicitly secured only when it’s requested from the root. It’s a simple fix that’s (now) explained very clearly on the ELMAH site (which I’d sent them): the handler just needs to be registered beneath the location in which it’s used.
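To make the mismatch concrete, here is a deliberately simplified model in Python. It assumes, for illustration only, that a globally registered handler answers any path ending in `elmah.axd` while the authorization rule covers only the root path; the function names are hypothetical and this is not ELMAH’s or ASP.NET’s actual path-matching logic.

```python
from typing import Callable


def global_handler(path: str) -> bool:
    """Handler registered globally: serves the log for any path ending in elmah.axd."""
    return path.lower().endswith("/elmah.axd")


def scoped_handler(path: str) -> bool:
    """Handler registered beneath its secured location: serves the log only at the root."""
    return path.lower() == "/elmah.axd"


def root_authorization(path: str) -> bool:
    """Authorization rule that only applies to the root location."""
    return path.lower() == "/elmah.axd"


def is_exposed(path: str, handler: Callable[[str], bool]) -> bool:
    """The log leaks when the handler serves a path that no authorization rule guards."""
    return handler(path) and not root_authorization(path)


for path in ["/elmah.axd", "/anything/elmah.axd"]:
    print(path, is_exposed(path, global_handler), is_exposed(path, scoped_handler))
```

With the global registration, `/anything/elmah.axd` is served but never checked, so it reports as exposed; scoping the handler to the same location as the authorization rule makes the two checks agree, which is the essence of the fix described above.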

So the 11th contact goes out and it’s back to Microsoft, because I still haven’t had any personal contact from Black and Decker. I had a response from Microsoft just 9 minutes later, even though it was about 19:30 local, so kudos to them for their proactivity. Remember, this was all on the morning of the 24th (Friday last week), but by late that evening my time, ELMAH was still accessible. Granted, it was evening over in the US, but we’re now more than 100 hours into this saga.

Finally, come Saturday morning, ELMAH is secure. That’s four and a half days of time wasted from initial contact to securing the risk. Remember, this involved customers’ usernames and passwords on public display, no doubt in many cases the same ones they use for their email or to do their banking.

Four days after everything was resolved and 9 days since I first raised the risk, I still haven’t had any response from Black and Decker. Zero. Zip. Nada. Not a “Thanks mate” nor a “Mighty nice of you to point this out privately”, no “How might we fix this?” or “Is there anything else we should look at?”, just absolute silence. Now I don’t privately disclose in order to achieve any sort of personal benefit, but by my moral compass, when you do someone a favour – particularly one that could save them a great deal of inconvenience – a little gratitude in response goes a long way.

This incident plus the Westfield one and literally dozens of others – some I’ve written about, many I’ve not – led me to wonder: what circumstances lend themselves to private disclosure? When is public a better option? I broke it down into three measures; let me explain.

1. What is the purpose of disclosing the risk?

Particularly when a public disclosure lands on the front page of the news and consequently on the CTO’s (or even CEO’s) desk, inevitably the question comes up – why’d he do it? What’s in it for him? What does this guy want from us?

On numerous occasions, after making a public disclosure I’ve had private responses which clearly indicate the sender is under the impression that I’m after money. You can almost read “Ok, how much do you want to help us fix this thing?” between the lines of an otherwise polite but curt response. This can never be the intent of disclosure, doing this would amount to nothing less than running an extortion racket.

The purpose cannot be self-serving; if it were, there’d be nothing to stop you from running around calling out every single vuln you find and to hell with the consequences. Whilst there’s unarguably a reputation boost from a carefully constructed public disclosure, there has to be a “greater good”.

The most significant beneficiaries of the public disclosures I make are software developers. I want to help these guys understand the ins and outs of website security and nothing gets their attention more than publicly calling out examples. But just as importantly, you have to help them understand why the risks are, well, risks. And you need to help them understand how to fix those risks, not just stand there and give a “Ha Ha” Nelson Muntz style; that’s really not going to help anyone.

Of course the other beneficiary is those who would be impacted if the risk was exploited. If disclosing the risk will lead to the site owner rectifying it before customers can be adversely impacted, then that’s a good thing. Which leads me neatly to the second point.

2. Who is the victim if it’s exploited?

When a vuln is disclosed, naturally there is a risk that someone will then exploit it. Who is impacted if that happens is extremely important because in the scheme of exploited website risks there are really two potential victims: the users of the site and the site owner.

In this context, website users are innocent parties; they’re simply using a service and expecting that their info will be appropriately protected. Public disclosure must not impact these guys, it’s simply not fair. Dumping passwords alongside email addresses or usernames, for example, is going to hurt this group. Yes, they shouldn’t have reused their credentials on their email account, but they did and now their mail is pwned. That’s a completely irresponsible action on the part of those who disclosed the info and it’s going to seriously impact ordinary, everyday people.

Disclosing XSS or TLS flaws, by contrast, doesn’t pose an immediate threat to consumers; an attacker still needs to go out and weaponise the risk. This might involve the attacker constructing phishing scams or organising themselves to the extent that they can actually go and sniff packets from at-risk users. Of course this happens, but it takes some effort, at least enough that the site owner has time to fix or disable the feature in advance.

On the other hand, risks that impact only the site owner are, in my humble opinion, fairer game. The site owner is ultimately accountable for the security position of their asset and it makes not one iota of difference that the development was outsourced or that they rushed the site or that the devs just simply didn’t understand security. When the impact of disclosure is constrained to those who are ultimately accountable for the asset, whether that impact be someone else exploiting the risk or simply getting some bad press, they’ve only got themselves to blame.

3. What is the likelihood of the risk being fixed?

There are many instances on this blog where I’ve written about poor security. There are many instances not on this blog where I’ve privately written to website owners about poor security. Overwhelmingly, risks I’ve disclosed publicly have been taken seriously and, equally overwhelmingly, risks I’ve disclosed privately haven’t been. There are exceptions, but there is no arguing the pattern and it’s exemplified by the Westfield and Black and Decker examples I opened with.

When a risk is disclosed publicly, the website owner is on notice. There is no arguing the increased visibility public disclosure creates and there is also no arguing that it expedites remediation on the part of the site owner. Partly this is due to the increased likelihood of the risk being exploited and partly it’s because in the face of public naming and shaming, the site owner is somewhat compelled to respond.

The other thing that tends to happen when a risk is made public is that the one lone voice that was the discloser suddenly becomes a chorus of like-minded individuals. For example, this tweet has now been RT’d over 2,100 times and, as you’ll see from the responses, generated an awful lot of noise about the woeful state of Tesco’s security. You can’t ignore that sort of pressure (certainly the ICO didn’t) and without doubt it pushes an organisation to address its security.

Moving forward

I hope this gives some insight into my personal view of disclosure. I wouldn’t have approached Black and Decker any differently (it doesn’t satisfy rule 2 on victim impact) and likewise I wouldn’t have approached Westfield any differently (the results speak for themselves). Sometimes people will have views that differ, but frankly, if I can satisfy the measures I’ve described above then I can sleep at night confident that, on balance, security on the web has benefited.