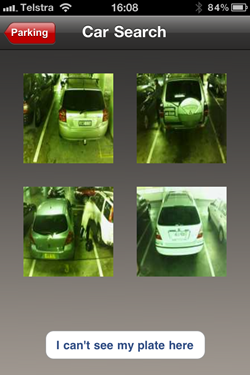

A few years ago I was taking a look at the inner workings of some mobile apps on my phone. I wanted to see what sort of data they were sending around and as it turned out, some of it was just not the sort of data that should ever be traversing the interwebs in the way it was. In particular, the Westfield iPhone app to find your car caught my eye. A matter of minutes later I had thousands of numberplates for the vehicles in the shopping centre simply by watching how this app talked over the internet:

In line with my personal views on disclosure, I published a blog post about it and a media furore followed: how on earth can a company be so careless with our personal data?! Why wasn’t this identified earlier?

The thing is, this sort of flaw is both very common and very easily identified, whether in your own apps or in anyone else’s, and that’s exactly what I set out to show you in my latest Pluralsight course, Hack Your API First.

API all the things!

If you believe what you read, the average “Internet of Things” device has 25 security flaws. The what now?! You know, the IoT – that idea where your fridge needs to talk to your toaster because, well, uh, “reasons”. But seriously, IoT is becoming a big thing and like many big things in tech, there’s a gold rush to create these new devices and we all know that one of the first things that gets overlooked in a tech boom is security.

Let me give you some examples: You know how you always wished your toilet was connected? You heard me, a dunny that can talk to your phone. No really, it’s a thing, it looks like this:

I actually used this in some security talks earlier last year and hypothesised that we may well be heading into an era of infected toilets (one person very eloquently summed it up as a toilet that could back-door you). Anyway, as it turns out, Trustwave did indeed follow up with a security advisory in August of last year. There you go, patch your toilets, folks!

Ok, probably not many of us are in a rush to go out and connect the smallest room in the house, but how about this one; wouldn’t it be awesome to have connected light globes like these:

These really do rock: use your phone to adjust not just the brightness but the colour of the lights as well, so that you can set the mood of your environment. Everything about this is awesome, except for the bit that allowed hackers to steal your wifi credentials via them. Oops. What’s particularly poignant about this whole thing is their security update, which states “No LIFX users have been affected that we are aware of”. Well, of course not that you’re aware of, because who on earth discovers that their neighbours have been torrenting over their wifi and then thinks “Oh, it must be that my light globes have disclosed my WPA2 password”? Interesting times we live in.

We’re in so much of a rush to connect our “things” and be the first to market with the new shiny that we’re often missing the security fundamentals. But that doesn’t mean that you can’t identify them yourself, you’ve just got to know where to look.

Hiding API security vulnerabilities in plain sight

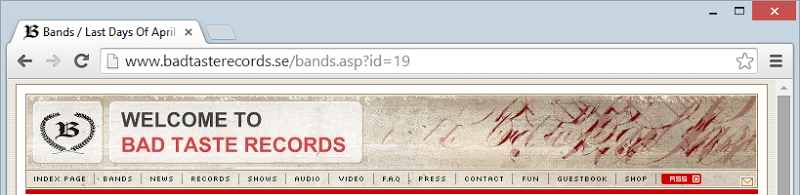

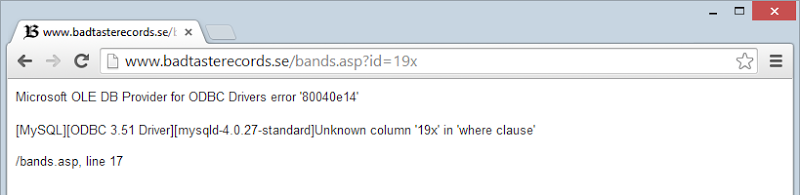

Here’s the problem with mobile app security today and I’m going to demonstrate it by showing you a web app in a browser.

Does this website look like it might have a SQL injection risk? It’s a classic ASP website with a query string parameter, so the chances are higher than average:

We can establish a likelihood just by adding a single character to the URL:

And that’ll do it – a combination of visual observation of the URL and the addition of a single character shows that at best, they’re not properly handling input. At worst, well… (incidentally, this was previously brought to their attention)
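The single-character check above is easy to script against your own site. Here’s a minimal Python sketch, assuming a hypothetical URL and parameter name (`page.asp`, `id`) and an apostrophe as the probe character. The list of error fragments is a rough, hypothetical heuristic rather than an exhaustive detector: a match suggests, but doesn’t prove, that input is reaching the SQL query unescaped.

```python
from urllib.parse import urlsplit, urlunsplit, parse_qsl, urlencode

# Hypothetical heuristic: fragments that commonly appear in database error
# pages. A hit is a hint worth investigating, not proof of SQL injection.
SQL_ERROR_HINTS = (
    "unclosed quotation mark",
    "syntax error",
    "ole db provider",
    "odbc",
)


def add_probe(url: str, param: str, probe: str = "'") -> str:
    """Return `url` with `probe` appended to the value of query parameter `param`.

    Other parameters are left untouched; if `param` is absent, the URL's
    query is simply re-encoded unchanged.
    """
    parts = urlsplit(url)
    query = parse_qsl(parts.query, keep_blank_values=True)
    probed = [(key, value + probe if key == param else value) for key, value in query]
    # urlencode percent-encodes the apostrophe as %27; the server decodes it back to '.
    return urlunsplit(parts._replace(query=urlencode(probed)))


def looks_like_sql_error(body: str) -> bool:
    """True if the response body contains any of the hypothetical error hints."""
    lowered = body.lower()
    return any(hint in lowered for hint in SQL_ERROR_HINTS)


if __name__ == "__main__":
    print(add_probe("http://example.com/page.asp?id=42&sort=name", "id"))
    # http://example.com/page.asp?id=42%27&sort=name
```

Fetching the probed URL and passing the body to `looks_like_sql_error` is left out deliberately; the point is how little it takes to go from “visual observation of the URL” to a repeatable test.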

Now let’s try this with a mobile app – does this one have a possible SQL injection risk in the web API?

You have absolutely no idea, at least not by looking at the app. It’s communicating over HTTP, and with that come all the risks associated with HTTP, but the communication isn’t transparent: you can’t see what’s going on. It may have a SQL injection risk; I can’t tell from what I’m seeing here, but I do know how to find out, and that’s what this course is all about.

About the course

In a nutshell: vulnerabilities in mobile apps are everywhere, but they’re not as immediately apparent as in their browser-based counterparts. However, it’s easy to identify these risks simply by monitoring how your device (and obviously the apps on it) talks over the internet to web service back ends. It’s also trivial to change how the services respond to the device, which often has an adverse security impact on the app, such as enabling features that shouldn’t be accessible.
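To make the “change how the services respond” point concrete, here’s a minimal Python sketch of what an intercepting proxy effectively does to a JSON response before the app ever sees it. The payload shape, the `isPremium` field and both helper functions are hypothetical; in practice a tool like Fiddler lets you edit responses in flight by hand. The takeaway is that any decision the app makes purely from the response can be flipped, so the server has to enforce access itself.

```python
import json


def tamper_response(body: bytes, field: str, new_value) -> bytes:
    """Simulate a man in the middle rewriting one field of a flat JSON object."""
    payload = json.loads(body)
    payload[field] = new_value
    return json.dumps(payload).encode("utf-8")


def client_unlocks_feature(body: bytes) -> bool:
    """A naive (hypothetical) client that blindly trusts the server's flag."""
    return bool(json.loads(body).get("isPremium", False))


def server_can_use_feature(user_id: str, premium_users: set) -> bool:
    """The check that actually matters: the server consults its own records,
    never anything the client sends or echoes back."""
    return user_id in premium_users


if __name__ == "__main__":
    original = b'{"userId": "u123", "isPremium": false}'
    tampered = tamper_response(original, "isPremium", True)
    print(client_unlocks_feature(original))          # False
    print(client_unlocks_feature(tampered))          # True: the UI unlocks the feature
    print(server_can_use_feature("u123", {"u999"}))  # False: the server still refuses
```

The client-side flag is fine for deciding what to *show*; it just can’t be the thing that decides what a user is *allowed* to do.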

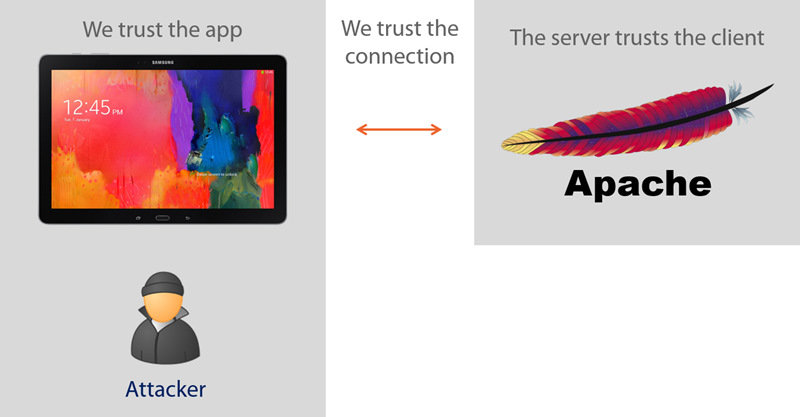

Much of the premise of this course is based around the trust assumptions developers make. For example, they build the app and they build the back end service, and because they “own” both components they trust each to behave in the way they designed it. They may even implement SSL to encrypt the connection on the assumption that this will prevent anyone observing or tampering with the data. All of these assumptions are false, and the course walks through exactly why in explicit detail.

Hacking yourself first (on any device)

I wrote this course as a successor to one of my most successful courses, Hack Yourself First: How to go on the Cyber-Offense. That course remains enormously popular one year on from launch, and I suspect that’s mostly because it’s so practical. It takes developers through how to identify risks, how to exploit them and then what the secure patterns look like. It does all this from the hacker’s perspective, that is, someone who is external to the system and can only observe from the outside, yet knows the vulnerable patterns to look for.

One of the great things about this approach is that it’s technology agnostic: it doesn’t matter what your framework of choice is. If it’s pushing angle brackets or JSON over HTTP (or HTTPS, for that matter), it’s equally relevant whether you’re an iOS and PHP dev or an ASP.NET and Win Phone fanatic.

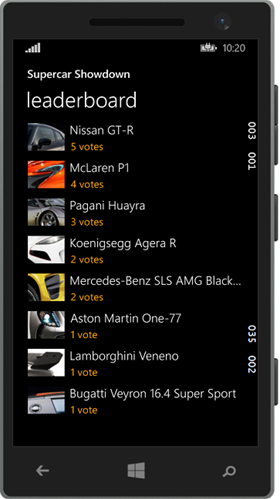

As with the Hack Yourself First course, there is a vulnerable app to play with and it includes the same site as before over at hackyourselffirst.troyhunt.com. Of course there are now a few more APIs on it, but it’s the same site with the same sample scenario (rating your favourite supercars), so it will be familiar to many people. Complementing the back end is an all-new vulnerable mobile app which I’ve wrangled up as a Win Phone 8.1 app:

This is the app running in the emulator in Visual Studio (an excellent emulator at that, I might add), and as the course progresses, it’s this app whose traffic is observed, whose risks are identified and whose vulnerabilities are then exploited. But the course doesn’t stop at the hypothetical; it looks at real-world apps and how they’ve implemented security, both good and bad. It’s amazing how prevalent these risks are in live apps running on thousands or even millions of devices. Let me give you a perfect example.

In mobile, vulnerabilities are falling out of the cloud

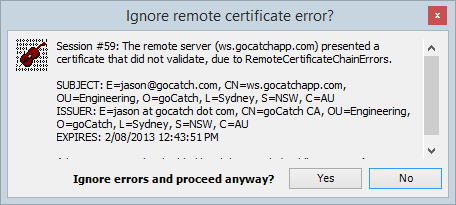

A significant portion of the apps I look at on my iPhone have serious security vulnerabilities, far more so than their browser-based brethren. It’s almost as if the risks drop straight out of the proverbial cloud on which their back ends are hosted. In fact, this is exactly what happened whilst I was recording the course and looking for a good example of security. Whilst monitoring the traffic in Fiddler (there’s a lot of Fiddler in this course), I opened up the goCatch taxi app and saw this:

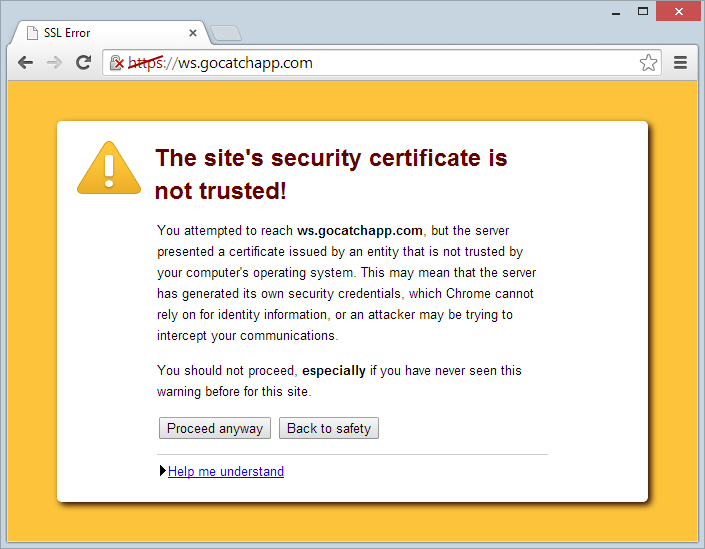

Wait – what?! This isn’t a very common sight even in the vulnerability-riddled world that is mobile apps, what on earth is going on here?! I took the host name that the service was talking to and plugged it into Chrome:

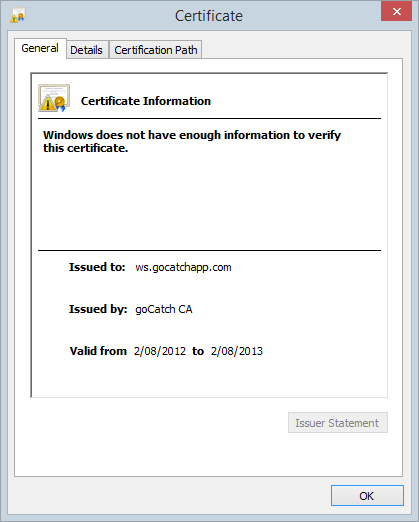

Well, that doesn’t look right at all. Browsers are pretty good these days at showing MASSIVE warnings when there’s something wrong with a site’s certificate, so let’s take a closer look at it:

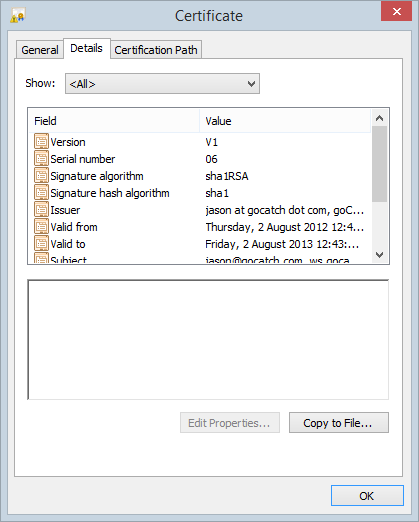

Hang on, there’s no “goCatch CA” – crikey, that’s a self-signed certificate! Now this is significant because it means that rather than going to a certificate authority and purchasing (or getting for free) an SSL cert which the device then validates against a trusted list of CAs, someone (presumably a bloke called Jason, based on the Fiddler error message) has just created their own and loaded it into production!

Let’s take a closer look at the details:

This was around July 2014, so even the validity period had passed, and no, the app wasn’t doing any sort of validation of the cert whatsoever. The significance, of course, is that the very risk goCatch set out to mitigate with HTTPS, a “man in the middle” attack, was left completely wide open: an attacker who could get in the middle of the communication could simply intercept the traffic, present their own self-signed certificate and the app would be happy. Hey, it’s talking over HTTPS, everything must be ok, right? Guys? Hello…?
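For contrast, here’s roughly what “validating the cert” means, sketched with Python’s standard `ssl` module rather than whatever stack the goCatch app actually used. The default context checks the certificate chain against trusted CAs, the hostname and the validity period during the handshake; the “insecure” context is the anti-pattern that lets a self-signed, expired cert sail through. The `is_expired` helper and the sample dates are hypothetical, included only to show how a cert’s `notAfter` value can be checked.

```python
import ssl
import time
from typing import Optional


def strict_context() -> ssl.SSLContext:
    """Validate the chain against the system's trusted CAs and check the hostname.

    Connecting to a server presenting a self-signed cert like the one above
    fails the handshake with ssl.SSLCertVerificationError.
    """
    return ssl.create_default_context()


def insecure_context() -> ssl.SSLContext:
    """The anti-pattern: accept any certificate at all. Anyone in the middle
    can present their own cert and the connection still 'succeeds'."""
    ctx = ssl.create_default_context()
    ctx.check_hostname = False  # must be turned off before verify_mode can be CERT_NONE
    ctx.verify_mode = ssl.CERT_NONE
    return ctx


def is_expired(not_after: str, now: Optional[float] = None) -> bool:
    """Check a notAfter value in the format returned by SSLSocket.getpeercert(),
    e.g. 'Jun 30 23:59:59 2014 GMT'. `now` is seconds since the epoch."""
    expiry = ssl.cert_time_to_seconds(not_after)
    current = time.time() if now is None else now
    return current > expiry
```

In use, you’d wrap a socket with `strict_context().wrap_socket(sock, server_hostname=host)` and let a failed handshake fail loudly rather than swallowing the error. Note that the insecure version isn’t some exotic hack; it’s two lines, which is exactly why it turns up in production.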

Now I do want to point out here that goCatch were excellent in handling this when I reported it to them privately. They responded quickly over Twitter with a means of privately contacting them, I detailed the risk in email and they immediately got it. They were receptive, appreciative and as of the time of writing, have fully rectified the issue – there’s now a valid cert from DigiCert and the app validates it such that you can’t just drop your own self-signed one in and MitM the traffic (at least not without compromising the device, but that’s another story I go into in the course).

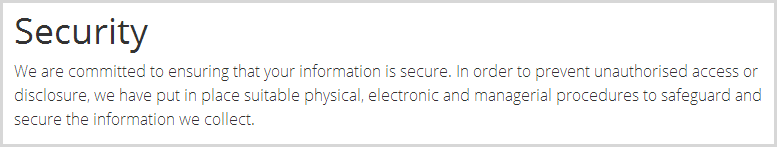

A poignant observation I also want to make here is that the guys understood this risk and certainly it worried them when they realised the app had a serious vulnerability. Yet even at the time of discovery, their privacy policy was very clear about them having “suitable physical, electronic and managerial procedures to safeguard and secure the information we collect”:

I’ve no doubt that was their intent, yet the execution missed the mark in a key area. By the same token, I was (and still am) a very happy goCatch user and I had absolutely no idea that behind the veneer of that rich client app they’d made a fundamental mistake with their transport layer encryption. But of course, because the risk was in an API behind a mobile app, a massive yellow warning message like the one in Chrome never appeared; the app simply gobbled up the invalid cert. All it would have taken is a few minutes of testing the services for risks and this would have been discovered. Not to put too fine a point on it, but that’s exactly why I wrote this course.

Just one more thing…

Just this week, every major news outlet has been seemingly infatuated with this story:

In one day this week, I did three radio interviews, had a TV news crew in my office and answered numerous media queries via email; it’s been a massive story. And let’s face it, by all accounts it’s a pretty serious incident, but the relevance here is this statement:

According to BuzzFeed, the hacker accessed the photos thanks to an iCloud leak that allowed the celebrities' phones to be hacked

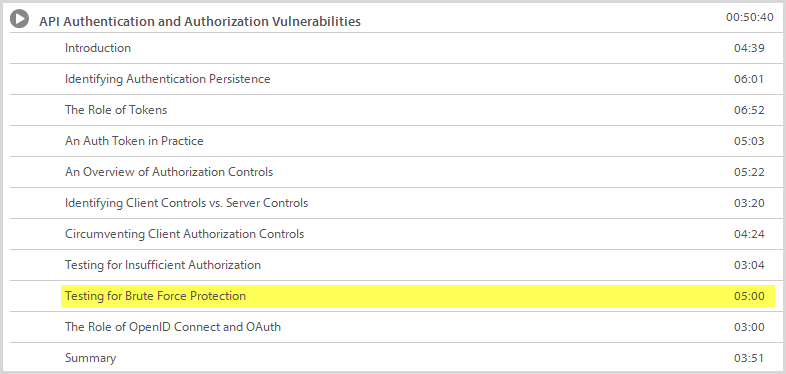

Software vulnerability, you say? Well, actually it’s more specific to the context here than the title implies, as it turns out that Apple had a brute force vulnerability in one of their APIs. It’s not yet entirely clear whether that was the attack vector in this case (Apple claims it was poor password practices on the part of the victims which, of course, is exactly the weakness that a brute force attack would target), but it is clear that they did indeed have a risk and that the iBrute code mentioned in that article successfully exploited it.

If only we had some good material to help us identify this risk in mobile apps… oh hang on:

All jokes aside, the point is that brute force attacks are a well-known risk and they’re easy to test for, even when the vulnerability is in a mobile API behind a rich client app. It’s not dissimilar to the point I made about the goCatch SSL: it’s readily identifiable and has easy mitigations; it’s a question of awareness on the part of developers and then proactively seeking out any occurrences of it.
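One of those easy mitigations is simply refusing to keep checking passwords after too many failures. Here’s a minimal Python sketch of a per-account lockout, assuming hypothetical limits (5 failures within 15 minutes), a hypothetical `LoginThrottle` class and an in-memory store; a real service would persist this state and would likely throttle per IP as well. It also suggests the test: hammer your own login API with wrong passwords and see whether the responses ever change.

```python
import time
from collections import defaultdict, deque
from typing import Callable, Deque, Dict


class LoginThrottle:
    """Per-account lockout: once `max_failures` failed attempts fall within
    `window_seconds`, further attempts are refused until old failures age out."""

    def __init__(self, max_failures: int = 5, window_seconds: float = 900,
                 clock: Callable[[], float] = time.monotonic):
        self.max_failures = max_failures
        self.window_seconds = window_seconds
        self.clock = clock  # injectable so the behaviour can be tested deterministically
        self._failures: Dict[str, Deque[float]] = defaultdict(deque)

    def _prune(self, account: str) -> Deque[float]:
        """Drop failures older than the window and return the remainder."""
        cutoff = self.clock() - self.window_seconds
        failures = self._failures[account]
        while failures and failures[0] <= cutoff:
            failures.popleft()
        return failures

    def is_locked(self, account: str) -> bool:
        return len(self._prune(account)) >= self.max_failures

    def attempt(self, account: str, password_ok: bool) -> str:
        """Record a login attempt; returns 'locked', 'ok' or 'failed'."""
        if self.is_locked(account):
            return "locked"  # refuse without revealing whether the password was right
        if password_ok:
            self._failures[account].clear()
            return "ok"
        self._failures[account].append(self.clock())
        return "failed"
```

Returning “locked” even when the password is correct stops an attacker confirming a guess mid-lockout. The trade-off is that per-account lockout can itself be abused to lock legitimate users out, which is why it’s often paired with per-IP throttling or progressive delays.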

Next step: Do course, write awesome secure apps

Just as much as the next guy (and probably even more), I love the levels of connectedness all my “things” are getting. I have Withings scales and Apple TVs (yes, plural) and of course dozens and dozens of mobile apps on iPhone and iPad, plus an increasing array on my Windows 8 machines, many of them relying on the web to communicate with back ends.

I’d also love to have the level of connectedness you can get with a Tesla, I’ll probably buy an iWatch (if such a thing arrives) and I’m going to keep downloading mobile apps left, right and centre. But as much as anyone, I’m acutely aware of how easy it is to introduce risks even when security genuinely is important to the developers. If each developer of these services could take just a few hours (ok, 4 hours and 7 minutes) to learn the common vulnerability patterns and then invest just a little time checking their apps, we’d all be able to move forward into the connected future worrying a lot less about hackers taking over our toilets and our light globes.