It seems like every manufacturer of anything electrical that goes in the house wants to be part of the IoT story these days. Further, they all want their own app, which means you have to go to gazillions of bespoke software products to control your things. And they're all - with very few exceptions - terrible:

That's to control the curtains in my office and the master bedroom, but the hubs (you need two, because the range is rubbish) have stopped communicating.

That one is for the spa, but it looks like the service it's meant to authenticate to has disappeared, so now, you can't.

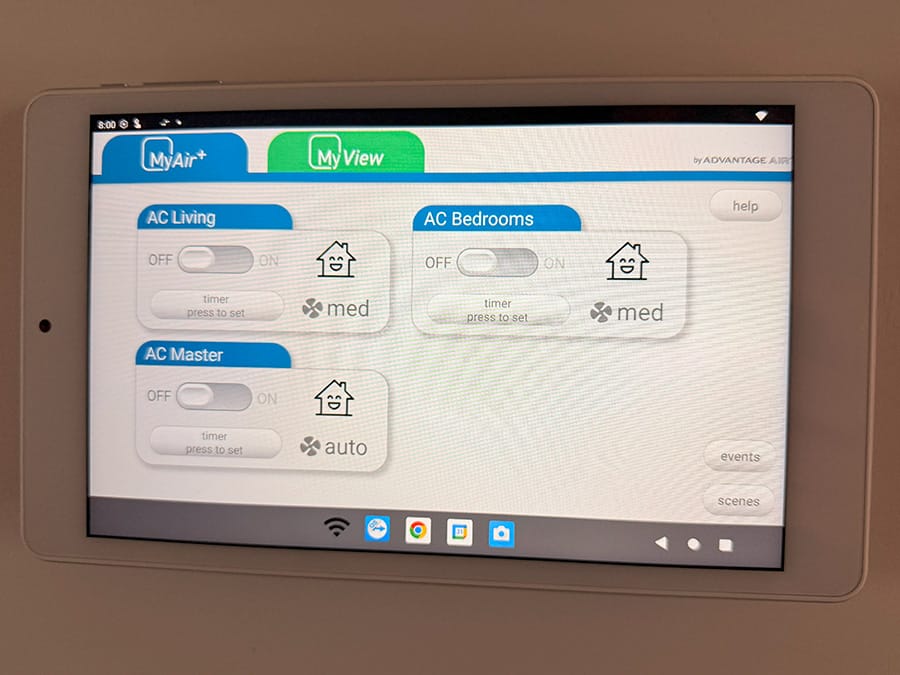

And my most recent favourite, Advantage Air, which controls the many tens of thousands of dollars' worth of air conditioning we've just put in. Yes, I'm on the same network, and yes, the touch screen has power and is connected to the network. I know that because it looks like this:

That might look like I took the photo in 2013, but no, that's the current-generation app, complete with an Android tablet now fixed to the wall. Fortunately, I can gleefully ignore it, as all the entities are now exposed in Home Assistant (HA) and then passed through to Apple Home via HomeKit Bridge, where they appear on our iThings. (Which also means I can replace that tablet with a nice iPad Mini running Apple Home and put the Android into the server rack, where it still needs to act as the controller for the system.)
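HomeKit Bridge is normally set up through the HA UI, but it can also be declared in YAML. Here's a minimal sketch that bridges only `climate` entities to Apple Home. The bridge name and the domain filter are illustrations, not my actual setup:

```yaml
# configuration.yaml: hypothetical HomeKit Bridge that only exposes climate entities
homekit:
  - name: HA Climate Bridge   # hypothetical bridge name shown in Apple Home
    filter:
      include_domains:
        - climate             # e.g. the air conditioning zones
```

Filtering by domain keeps the bridge small. Adding a second list entry would create another bridge with its own filter.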

Anyway, the point is that when you go all in on IoT, you're dealing with a lot of rubbish apps all doing pretty basic stuff: turn things on, turn things off, close things, etc. HA is great as it abstracts away the crappy apps, and now, it also does something much, much cooler than just all this basic functionality...

Start by thinking of the whole IoT ecosystem as simply being triggers and actions. Triggers can be based on explicit activities (such as pushing a button), observable conditions (such as the temperature in a room), schedules, events and a range of other things that can be used to kick off an action. Actions then include closing a garage door, playing an audible announcement on a speaker, pushing an alert to a mobile device and, like triggers, many other things as well. That's the obvious stuff, but you can get really creative when you start considering devices like this:
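In HA, that model maps directly onto an automation's `trigger` and `action` sections. Here's a minimal sketch that pairs an observable-condition trigger with a push alert. The `sensor.office_temperature` entity and the 28°C threshold are hypothetical. `notify.adult_iphones` is the notify target used later in this post:

```yaml
# Hypothetical example: sensor.office_temperature and the 28°C threshold are assumptions
- id: office_too_hot
  alias: Office too hot
  mode: single
  trigger:
    # Trigger: an observable condition (temperature crossing above a threshold for 5 minutes)
    - platform: numeric_state
      entity_id: sensor.office_temperature
      above: 28
      for:
        minutes: 5
  action:
    # Action: push an alert to mobile devices
    - service: notify.adult_iphones
      data:
        title: "Office temperature"
        message: "It's {{ states('sensor.office_temperature') }}°C in the office"
```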

That's a Sonoff IoT water valve, and yes, it has its own app 🤦♂️ But because it's Zigbee-based, it's very easy to incorporate it into HA, which means now, the swag of "actions" at my disposal includes turning on a hose. Cool, but boring if you're just watering the garden. Let's do something more interesting instead:

The valve is inline with the hose, which is pointing upwards, right above the wall that faces the road and has one of these mounted on it:

That's a Ubiquiti G4 Pro doorbell (full disclosure: Ubiquiti has sent me all the gear I'm using in this post), and to extend the nomenclature used earlier, it has many different events that HA can use as triggers, including a press of the button. Tie it all together and you get this:

Not only does a press of the doorbell trigger the hose on Halloween, it also triggers Lenny Troll, who's a bit hard to hear, so you gotta lean in real close 🤣 C'mon, they offered "trick" as one of the options!
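Under the hood, the hose part is just a trigger and an action. Here's a minimal sketch. It assumes the Sonoff valve appears in HA as `switch.front_hose_valve`, which is a hypothetical name, and depending on the Zigbee integration it may show up as a `valve` entity instead. It also reuses the `binary_sensor.doorbell_ring` entity from the doorbell automation further down and assumes a 10-second squirt:

```yaml
# Hypothetical sketch: switch.front_hose_valve and the 10-second duration are assumptions
- id: halloween_doorbell_hose
  alias: Halloween doorbell hose
  mode: single
  trigger:
    - platform: state
      entity_id: binary_sensor.doorbell_ring
      to: "on"
  condition:
    # Only fire on Halloween (31 October)
    - condition: template
      value_template: "{{ now().month == 10 and now().day == 31 }}"
  action:
    - service: switch.turn_on
      target:
        entity_id: switch.front_hose_valve
    - delay:
        seconds: 10
    - service: switch.turn_off
      target:
        entity_id: switch.front_hose_valve
```

With `mode: single`, repeat presses during the 10-second run are ignored instead of extending the spray.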

Enough mucking around; let's get to the serious bits and, per the title, the AI components. I was reading through the new features of HA 2025.8 (they do a monthly release in this form) and thought the chicken counter example was pretty awesome. Counting the number of chickens in the coop is a hard problem to solve with traditional sensors, but if you've got a camera that takes a decent photo and an AI service to interpret it, suddenly you have some cool options. Which got me thinking about my rubbish bins:

The red one has to go out on the road by about 07:00 every Tuesday (that's general rubbish), and the yellow one has to go out every other Tuesday (that's recycling). Sometimes, we only remember at the last moment and other times, we remember right as the garbage truck passes by, potentially meaning another fortnight of overstuffing the bin. But I already had a Ubiquiti G6 Bullet pointing at that side of the house (with a privacy blackout configured to avoid recording the neighbours), so now it just takes a simple automation:

```yaml
- id: bin_presence_check
  alias: Bin presence check
  mode: single
  trigger:
    - platform: state
      entity_id: binary_sensor.laundry_side_motion
      to: "off"
      for:
        minutes: 1
  condition:
    - condition: time
      weekday:
        - mon
        - tue
  action:
    - service: ai_task.generate_data
      data:
        task_name: Bin presence check
        instructions: >-
          Look at the image and answer ONLY in JSON with EXACTLY these keys:
          - bin_yellow_present: true if a rubbish bin with a yellow lid is visible, else false
          - bin_red_present: true if a rubbish bin with a red lid is visible, else false
          Do not include any other keys or text.
        structure:
          bin_yellow_present:
            selector:
              boolean:
          bin_red_present:
            selector:
              boolean:
        attachments:
          media_content_id: media-source://camera/camera.laundry_side_medium
          media_content_type: image/jpeg
      response_variable: result
    - service: "input_boolean.turn_{{ 'on' if result.data.bin_yellow_present else 'off' }}"
      target:
        entity_id: input_boolean.yellow_bin_present
    - service: "input_boolean.turn_{{ 'on' if result.data.bin_red_present else 'off' }}"
      target:
        entity_id: input_boolean.red_bin_present
```

OK, so it's a 40-line automation, but it's also pretty human-readable:

- When there's motion that's stopped for a minute...

- And it's a Monday or Tuesday...

- Create an AI task that requests a JSON response indicating the presence of the yellow and red bin...

- And attach a snapshot of the camera that's pointing at them...

- Then set the values of two input booleans

From that, I can then create an alert if the correct bin is still present when it should be out on the road. Amazing! I'd always wanted to do something to this effect but had assumed it would involve sensors on the bins themselves. Not with AI though 😊
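The alert itself can be another ordinary automation. Here's a minimal sketch. It assumes a 06:30 check on Tuesdays and the two input booleans set by the bin presence automation, which need to exist as helpers (created in the UI or under `input_boolean:` in configuration.yaml). It also assumes recycling falls on even ISO week numbers. The alias, time and parity rule are all hypothetical:

```yaml
# Hypothetical sketch: alias, 06:30 time and even-week recycling rule are assumptions
- id: bin_reminder
  alias: Bin reminder
  mode: single
  trigger:
    - platform: time
      at: "06:30:00"
  condition:
    - condition: time
      weekday:
        - tue
  action:
    # General rubbish goes out every Tuesday
    - if:
        - condition: state
          entity_id: input_boolean.red_bin_present
          state: "on"
      then:
        - service: notify.adult_iphones
          data:
            title: "Bin reminder"
            message: "The red bin is still next to the house"
    # Recycling goes out every other Tuesday (assumed: even ISO weeks)
    - if:
        - condition: state
          entity_id: input_boolean.yellow_bin_present
          state: "on"
        - condition: template
          value_template: "{{ now().strftime('%V') | int % 2 == 0 }}"
      then:
        - service: notify.adult_iphones
          data:
            title: "Bin reminder"
            message: "It's recycling week and the yellow bin is still next to the house"
```

ISO week parity slips at the end of a 53-week year, so treat it as a placeholder for however your council actually defines the fortnightly schedule.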

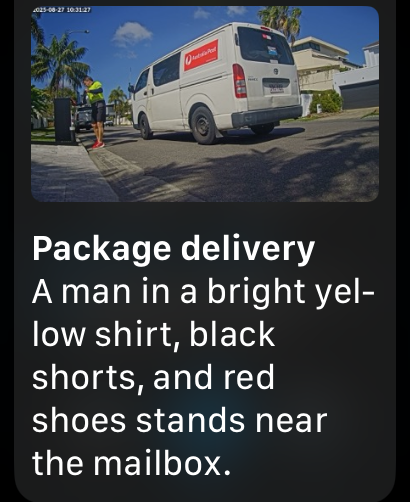

And then I started getting carried away. I already had a Ubiquiti AI LPR (that's a "license plate reader") camera on the driveway, and it just happened to be pointing towards the letter box. I've had Zigbee-based Aqara door and window sensors (they're effectively reed switches) on the letter box for ages (one for where the letters go in and one for the packages), and they announce the presence of mail via the in-ceiling Sonos speakers in the house. This is genuinely useful, and now, it's even better:

I screen-capped that on my Apple Watch whilst I was out shopping, and even though it was hard to make out the tiny picture on my wrist, I had no trouble reading the content of the alert. Here's how it works:

```yaml
- id: letterbox_and_package_alert
  alias: Letterbox/Package alerts
  mode: single
  trigger:
    - id: letter
      platform: state
      entity_id: binary_sensor.letterbox
      to: "on"
    - id: package
      platform: state
      entity_id: binary_sensor.package_box
      to: "on"
  variables:
    event: "{{ trigger.id }}" # "letter" or "package"
    title: >-
      {{ "You've got mail" if event == "letter" else "Package delivery" }}
    message: >-
      {{ "Someone just left you a letter" if event == "letter" else "Someone just dropped a package" }}
    tts_message: >-
      {{ "You've got mail" if event == "letter" else "You've got a package" }}
    file_prefix: "{{ 'letterbox' if event == 'letter' else 'package_box' }}"
    file_name: "{{ file_prefix }}_{{ now().strftime('%Y%m%d_%H%M%S') }}"
    snapshot_path: "/config/www/snapshots/{{ file_name }}.jpg"
    snapshot_url: "/local/snapshots/{{ file_name }}.jpg"
  action:
    - service: camera.snapshot
      target:
        entity_id: camera.driveway_medium
      data:
        filename: "{{ snapshot_path }}"
    - service: script.hunt_tts
      data:
        message: "{{ tts_message }}"
    - service: ai_task.generate_data
      data:
        task_name: "Mailbox person/vehicle description"
        instructions: >-
          Look at the image and briefly describe any person
          and/or vehicle standing near the mailbox. They must
          be immediately next to the mailbox, and describe
          what they look like and what they're wearing.
          Keep it under 20 words.
        attachments:
          media_content_id: media-source://camera/camera.driveway_medium
          media_content_type: image/jpeg
      response_variable: description
    - service: notify.adult_iphones
      data:
        title: "{{ title }}"
        message: "{{ (description | default({})).data | default('no description') }}"
        data:
          image: "{{ snapshot_url }}"
```

This is really helpful for figuring out which of the endless deliveries we seem to get are worth "downing tools" for and going out to retrieve mail. Equally useful is the most recent use of an AI task, recorded just today (and shared with the subject's permission):

Like packages, we seem to receive endless visitors, and getting an idea of who's at the door before going anywhere near it is pretty handy. We do get video on our phones (and, as you can see, the iPad), but they're not always at hand, and this way the kids have an idea of who it is too. Here's the code (the doorbell chime itself is played by a separate automation):

```yaml
- id: doorbell_ring_play_ai
  alias: The doorbell is ringing, use AI to describe the person
  trigger:
    - platform: state
      entity_id: binary_sensor.doorbell_ring
      to: 'on'
  action:
    - service: ai_task.generate_data
      data:
        task_name: "Doorbell visitor description"
        instructions: >-
          Look at the image and briefly describe how many people you see and what they're wearing, but don't refer to "the image" in your response.
          If they're carrying something, also explain that but don't mention it if they're not.
          If you can recognise what job they might have, please include this information too, but don't mention it if you don't know.
          If you can tell their gender or if they're a child, mention that too.
          Don't tell me anything you don't know, only what you do know.
          This will be broadcast inside a house so should be conversational, preferably summarised into a single sentence.
        attachments:
          media_content_id: media-source://camera/camera.doorbell
          media_content_type: image/jpeg
      response_variable: description
    - service: script.hunt_tts
      data:
        message: "{{ (description | default({})).data | default('I have no idea who is at the door') }}"
```

I've been gradually refining that prompt, and it's doing a pretty good job at the moment. Hear how the response noted his involvement in "detailing"? That's because the company logo on his shirt includes the word, and indeed, he was here to detail the cars.
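Both the letterbox and doorbell automations pass their text to `script.hunt_tts`, which isn't shown here. Here's a minimal sketch of what such a script could look like. It assumes HA's `tts.speak` action, a TTS entity called `tts.home_assistant_cloud` and two Sonos players named `media_player.kitchen` and `media_player.living_room`. All of those entity names are hypothetical:

```yaml
# scripts.yaml: hypothetical TTS announcement script.
# tts.home_assistant_cloud and the media_player entities are assumptions.
hunt_tts:
  alias: Hunt TTS
  mode: queued
  fields:
    message:
      description: Text to announce over the in-ceiling speakers
      example: "You've got mail"
  sequence:
    - service: tts.speak
      target:
        entity_id: tts.home_assistant_cloud
      data:
        media_player_entity_id:
          - media_player.kitchen
          - media_player.living_room
        message: "{{ message }}"
```

`mode: queued` means that a letter and a doorbell ring arriving close together are announced one after the other, so the second announcement isn't dropped.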

This is all nerdy goodness that has blown hours of my time for what, on the surface, seems trivial. But it's by playing with technologies like this and finding unusual use cases for them that we end up building things of far greater significance. To bring it back to my opening point, IoT is starting to go well beyond the rubbish apps at the start of this post, and we'll soon be seeing genuinely useful, life-improving implementations. Bring on more AI-powered goodness for Halloween 2025!

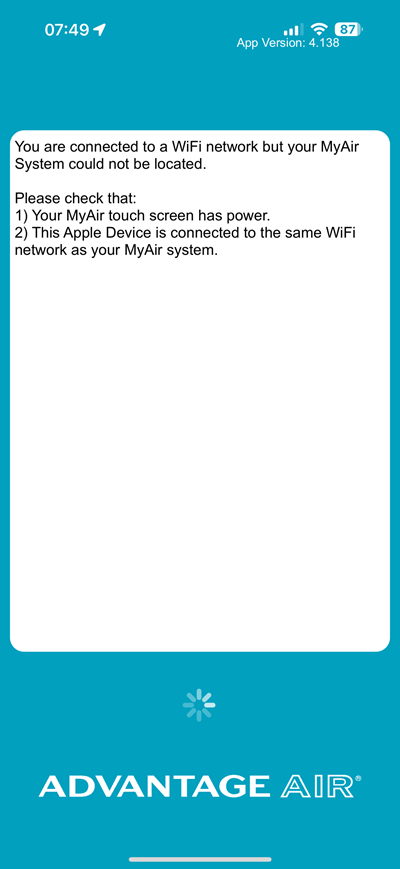

Edit: I should have included this in the original article, but the ai_task service is using OpenAI, so all processing is done in the cloud, not locally on HA. That requires an API key and payment, although I reckon the pricing is pretty reasonable (and the vast majority of those requests are from testing):