Clearly, Sony Pictures has had a rather bad time of it lately. First there were the threats from the alleged attackers, then the beginning of internal data dumps that now total tens of GB already, then the embarrassing internal email leaks, then the threats of 9/11 style attacks and now pulling the launch of “The Interview” because allegedly, the North Koreans don’t share their sense of humour. This is, without a doubt, the bizarrest of hacks in an industry where bizarre is par for the course.

One of the things that keeps hitting the headlines is how bad Sony’s security practices are (or at least “were”, apparently they’re back to fax machines now). But there’s that whole “stones and glass houses” thing which last night, prompted me to suggest this:

Now how many people think the practices we're all ridiculing Sony for are exceptional and not just the norm in large corporates...?

— Troy Hunt (@troyhunt) December 18, 2014

This is a very uncomfortable truth. Yes, many of Sony’s practices were atrocious and yes, they deserve to be raked over the coals for them, but are they the exception? Or the norm? I say it’s far more the latter than the former. Let me show you what I mean and how you can identify the same risks in your organisation that are probably going to cost Sony hundreds of millions of dollars.

How attackers get in

Before getting into the detail of the sort of things you want to be looking for behind your own corporate firewall, let’s just establish the threat we’re talking about.

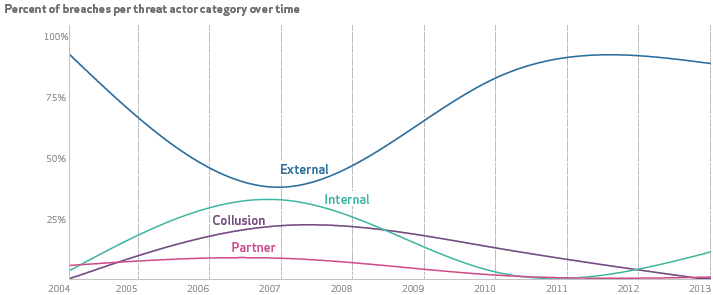

Obviously there are, on occasion, “bad actors” within the organisation: individual employees usually motivated by greed or vengeance but perhaps, as may even be the case with Sony, by idealism. In fact conventional wisdom held that a significant portion of attacks against the enterprise came from within, but according to Verizon’s latest data breach investigations report, that’s not really the case anymore:

Even though that internal category is very low, it only takes one disgruntled employee in one corner of a very large organisation like Sony for things to go very bad very quickly when they have access to too much information.

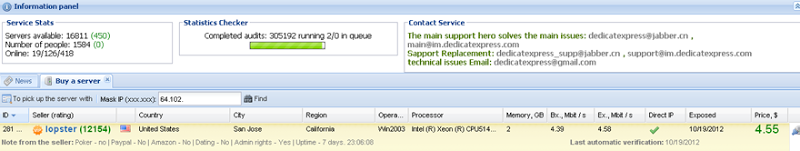

The other serious problem though is not compromised individuals in the organisation, rather compromised machines. Last year I wrote “Your corporate network is already compromised: are your internal web apps ready for attackers?”, which included a heap of info on risks behind the firewall. This included the Reuters video on how every large organisation has been penetrated by attackers, the willingness with which staff will plug random USB sticks found in the parking lot into their machines and this particularly intriguing piece from Brian Krebs on services selling direct access into Fortune 500 organisations. There’s even a nice little web UI for the experience:

The antivirus you’re running that many view as “security in a box”? Well firstly, it can’t possibly keep up with the hundreds of thousands of new viruses seen every day and secondly, that link talks about an absolutely woeful detection rate:

The average detection rate for these samples was 24.47 percent, while the median detection rate was just 19 percent. This means that if you click a malicious link or open an attachment in one of these emails, there is less than a one-in-five chance your antivirus software will detect it as bad.

In fact in the Sony case, there are reports that the attack did indeed involve malware and that, as I’ve suggested above, it was “undetectable”. Anything short of assuming the network perimeter is already compromised is folly.

The point is that even if every single individual in the organisation has nothing but the best of intentions, some number of them will always have malicious software running on their machine and doing an attacker’s bidding. What they have access to, the attacker has access to, and it raises the question – what do you have access to in your organisation? Let’s take a look.

Sensitive data on the company intranet

We’ll start somewhere obvious – the stuff on your intranet. Go and have a trawl around just how much stuff you have access to and consider, if you will, how much of it would be considered sensitive if it were to be leaked into the public domain. Lists of colleagues and personal contact info? Check. Passport quality photos of employees? Check. Arguably that’s necessary information for most people to do their job though, so let’s move on.

Documents are one of the big worries, and the ease with which PDFs and Office docs can be uploaded and distributed via intranet collaboration tools means there’s inevitably a heap of stuff that shouldn’t be there. Try searching for something as simple as “finance” or “procurement” and amidst an inevitably long list of results, consider what it might mean for some of those juicier docs to be leaked. They’re pretty innocuous search terms and the results usually disclose titles and abstracts that are enough to establish that it’s info you probably don’t want out there Sony-style.

Oh – and you know those disclaimers on PowerPoint presos that say “company sensitive info”? Yeah, that doesn’t actually do anything other than politely imply that you shouldn't consciously share the file willy nilly! Although it does make it rather easy to search for sensitive material…

Administrative rights on servers

A common theme with security in general is missing or excessive access rights. Behind the firewall it’s really easy to make the assumption that everyone is a good guy but as we’ve already established, that’s not always the case and even the good guys may be infected with bad software.

One particularly risky area for excessive rights is admins on servers. We think this should be well secured and we think this is carefully monitored in the enterprise, but don’t underestimate the allure of a “quick fix” by just dropping individuals, groups or even all authenticated users into the local administrators group (don’t laugh, it happens).

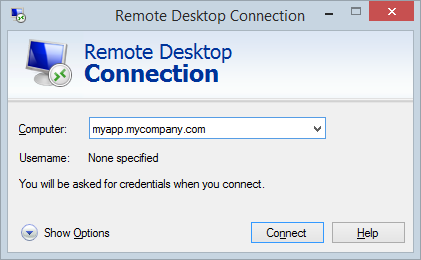

Anyone can discover whether their identity has the right to remotely log in to a server. Just fire up a Remote Desktop Connection and plug in the host name from the app’s URL:

As an individual, it’s dead easy to discover what you have access to in this regard. It doesn’t always mean you’ll have local administrative privileges, but it’ll usually mean you have access to a whole bunch of content you simply shouldn’t have. Consider what’s on many corporate servers and let your imagination run wild.

Keep in mind also that the discovery of machines that are open to remote desktop connections in this way can be entirely automated. It’s trivial code wise plus there are dedicated tools to discover risks within the organisation (Nmap is a good starting point).
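To give a sense of just how trivial that code is, here is a minimal sketch in Python that checks whether a list of hosts accepts TCP connections on the default Remote Desktop port (3389). The function names and the host list are hypothetical, and an open port only tells you the service is listening, not that your identity is allowed to log on. It is the sort of check you would run against machines you are responsible for auditing.

```python
import socket

RDP_PORT = 3389  # default Remote Desktop port


def accepts_connections(host: str, port: int = RDP_PORT, timeout: float = 1.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and unresolvable names.
        return False


def find_open_hosts(hosts, port: int = RDP_PORT, timeout: float = 1.0):
    """Return the subset of hosts that accept connections on the given port."""
    return [host for host in hosts if accepts_connections(host, port, timeout)]
```

Calling `find_open_hosts(["server01", "server02"])` with your own (here invented) server names returns only those listening on 3389. A dedicated tool like Nmap does this far more thoroughly, but the point stands: there is nothing sophisticated about the discovery step.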

Open folder shares

This one is particularly nasty in that it’s dead easy to expose a heap of data. This, of course, is by design:

The Network folder provides handy access to the computers on your network. From there, you can see the contents of network computers and find shared files and folders.

That’s not going to be news to most people, but put that in the context of an enterprise with a large number of machines and trusting colleagues eagerly sharing documents with each other and the risk profile changes substantially. That risk manifests itself in two ways:

- Folders that are shared by design as central points of collaboration i.e. “All our team docs for project foo will go into this folder”

- Folders that are shared by individuals on their own machines i.e. “Hey Joe, here’s the video of the company offsite meeting on my PC”

Now extend the open folder share problem to the application space. There are way too many software developers out there still deploying it wrong and using the good old copy and paste deployment paradigm followed by a healthy dose of hand-editing config files. Folder shares make that really, really convenient and what makes them even more convenient is when they’re not constrained by those pesky permissions that are always getting in the way of people doing their job. Open shares to resources like website roots not only expose copious amounts of source code, we’re also talking about all sorts of confidential information like credentials and in many cases, troves of documents stored on the file system by the app.

Oh – and just like with the remote logon rights on servers, this is information that’s discoverable in an entirely automatable fashion.
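As a rough illustration of how little effort that takes, the sketch below walks a folder (for example a share you have mounted or mapped) and flags files whose names hint at credentials or configuration. The list of name fragments is purely an assumption for illustration, not an exhaustive or authoritative set, and the function name is made up.

```python
import os

# Hypothetical name fragments that often hint at credentials or config files.
SUSPECT_FRAGMENTS = ("password", "passwd", "credential", "web.config", ".pfx")


def find_suspect_files(root: str, fragments=SUSPECT_FRAGMENTS):
    """Walk root and return sorted paths whose file name contains any fragment."""
    hits = []
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            lowered = name.lower()  # match case-insensitively
            if any(fragment in lowered for fragment in fragments):
                hits.append(os.path.join(dirpath, name))
    return sorted(hits)
```

Point it at a share you own and see what comes back. If a dozen lines of script can find the interesting files, so can whatever is running on a compromised machine with the same access.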

Weak credentials

You will no doubt have password requirements within your organisation which look something like this:

“All passwords must be a minimum of 6 characters long and include at least one lowercase character, one uppercase character and one number or symbol.”

As a result of this, your organisation inevitably has a big whack of passwords like this:

Acme01

Ah, but you need to change it every three months! Consequently, there will also be many passwords like these:

Acme02

Acme03

Acme04
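To make the point concrete, here is a small sketch of a checker for the policy quoted above, plus a helper that spots the “bump the number” style of rotation. Both function names are hypothetical and this is only one reading of the policy (it treats any ASCII punctuation as a “symbol”), but it shows that every one of these passwords is perfectly compliant.

```python
import re
import string


def meets_policy(password: str) -> bool:
    """Check the quoted policy: 6+ chars, a lowercase, an uppercase, a digit or symbol."""
    return (
        len(password) >= 6
        and any(c.islower() for c in password)
        and any(c.isupper() for c in password)
        and any(c.isdigit() or c in string.punctuation for c in password)
    )


def is_trivial_rotation(old: str, new: str) -> bool:
    """True if the two passwords share a stem and differ only in trailing digits."""
    old_stem = re.sub(r"\d+$", "", old)
    new_stem = re.sub(r"\d+$", "", new)
    return old != new and old_stem != "" and old_stem == new_stem
```

`meets_policy("Acme01")` is true, and so is `is_trivial_rotation("Acme01", "Acme02")`. The policy is satisfied at every step while the password barely changes.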

That’s usually at the Active Directory level but let’s not stop there. There are enough cases where you’re looking at user-driven passwords for other resources such as external services. Consider a scenario like this:

Not a great look if you’re Burger King and whilst that attack vector has never been publicly disclosed, it’d be a really safe bet to suggest that it boiled down to the credentials used for the account. And this is one of the real problems we face now – everyday folks in the organisation suddenly hold the keys to valuable corporate assets, yet they apply practices that even in a personal use capacity are highly questionable. Apply them to the corporate environment and now you’re really raising the stakes. For example, here’s how Sony Pictures’ CEO handled his passwords:

The emails show CEO Michael Lynton routinely received copies of his passwords in unsecure emails for his and his family's mail, banking, travel and shopping accounts, from his executive assistant, David Diamond. Other emails included photocopies of U.S. passports and driver's licenses and attachments with banking statements.

Frankly, the most bizarre thing about this is that he needed an assistant to remind him of his passwords, couldn’t he just store them insecurely himself?! Which brings me to the next point…

Insecure password storage

One of the nasty practices that came up with Sony was passwords stored unencrypted on the file system in a folder called… passwords. This is a great example of where we ridicule them for their practices (and rightly so), but it’s a very safe bet that if you’re inside an organisation of any reasonable size, you’ll find exactly the same thing if you ask around.

The problem with passwords being stored like this is very much a social one. You have multiple people working together who may well legitimately need access to the same resources using the one identity. A corporate social media account, for example. Where do you think your org stores their credentials for their public faces on Twitter and Facebook? Almost certainly not in any form of enterprise password management facility. There will be exceptions, of course, but you cannot beat the simple convenience of passwords in a text file or a Word doc.

Go and ask your marketing folks or your corporate affairs folks or whoever manages these accounts – “where do you store your passwords?” – and see what sort of response you get. In all likelihood, it won’t be pretty…

We software developers have our own issues

I talk to a lot of software developers from all walks of life and I reckon I see a good cross section of practices. In our defence (and certainly I’m one of the guys that has to deal with these problems too), we’re often working with multiple systems with large volumes of data and responding to demanding customers. We don’t always have good security answers to the challenges we’re faced with, at least not without investing further effort up front and making compromises on time or money.

A perfect example is the prevalence with which production data is sucked down into test environments. Yes, there’s a performance problem in production you want to reproduce and yes, it’s easy to just take a copy of the database, but stop and think about what this means. Those excessive access rights that were granted because “it’s just a test environment” suddenly apply to production data. Those connection strings everyone had access to because “it’s only test” now pull real customer records. It goes on and on, and there are good solutions out there to work around the risk, yet we somehow still end up with sensitive data at our disposal.
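One of those solutions is to mask identifying fields before a copy ever reaches test. The sketch below is an assumption-laden illustration rather than a recommendation of any particular product: the record layout (`name` and `email` fields), the function names and the `example.invalid` addresses are all made up. It replaces personal fields with a keyed hash so the same customer always maps to the same fake identity, which keeps joins and row counts realistic for performance testing.

```python
import hashlib
import hmac


def pseudonymise(value: str, secret: bytes) -> str:
    """Derive a stable, non-reversible token from a value using a keyed hash."""
    digest = hmac.new(secret, value.encode("utf-8"), hashlib.sha256).hexdigest()
    return digest[:16]


def mask_customer(record: dict, secret: bytes) -> dict:
    """Return a copy of a (hypothetical) customer record with personal fields masked."""
    masked = dict(record)  # leave the original record untouched
    token = pseudonymise(record["email"], secret)
    masked["email"] = f"user-{token}@example.invalid"
    masked["name"] = f"Customer {token[:8]}"
    return masked
```

The secret has to stay out of the test environment, otherwise anyone holding it can test guesses against the tokens. Non-identifying columns such as order totals pass through unchanged, so the data still behaves like production without being production.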

As developers, the systems we’re building (and may well have excessive access to) can contain serious amounts of data, and we know very well how to pull 10 million records out onto a local machine “just for testing”. But we’ll delete it when we’re finished testing, honest…

Corp mail is probably not the best place for racist or homophobic rants

Well actually, racist or homophobic rants are really best not had in the first place, but when they’re on the corp mail then whether it’s intentional or not, they’re representing your views within your capacity in the enterprise. Start cracking racist jokes at the president’s expense and it may well not end well for you.

It’s not just getting your mail servers owned that’s the problem, email has this habit of being very transportable and easily redistributable. This year we had a prominent professor down here who was otherwise a smart bloke except for his penchant for trying to outdo a mate with racial slurs via email. Despite the idiocy of the content, it was probably reasonable to assume that it should have remained a private conversation, yet here we are with the guy now out of a job.

So consider that in your own environment – how many “private” emails have you or those around you sent that would be explosive in a Sony-style way were they to be disclosed? As with sensitive data of other kinds, you can apply a really simple rule to email: you cannot lose what you do not have. Show some restraint and that’s one problem you won’t be dealing with.

Now try getting something done about it

Here’s the sobering reality about all this: getting anyone to care is hard. Actually I’ll rephrase that as Sony cares a great deal right now: getting anyone to care before an event happens is hard. Everyone is really busy and Joe’s got that deadline and Sally said she’d come in under budget for the year and well, it would take effort to fix this stuff!

This is the eternal paradox we face in security. We hypothesise about risks such as the ones above and try to convince the powers that be that a security incident would be a rather nasty affair but we can’t precisely say how likely it is nor how nasty it would be. We ask people to redirect effort and dollars from more immediately tangible initiatives in the hope that if all goes well, there’ll be nothing to show for it other than the absence of an incident.

What have you seen?

I’m genuinely interested in this and I’d love to hear about the sorts of practices you’re seeing inside organisations that are setting them up to become the next Sony in terms of data leaks. Obviously use some decorum and withhold names as you see fit, but some independent perspectives would be a useful addition to this post. Comment away!